Until about a year and a half ago, the way to get funding in physics was to somehow associate yourself with the hot trend of quantum computing and quantum information theory. Large parts of the string theory and quantum gravity communities did what they could to take advantage of this. On November 30, 2022, this all of a sudden changed as two things happened on the same day:

- Quanta magazine, Nature and various other places were taken in by a publicity stunt, putting out that day videos and articles about how “Physicists Create a Wormhole Using a Quantum Computer”. The IAS director compared the event to “Eddington’s 1919 eclipse observations providing the evidence for general relativity.” Within a few days though, people looking at the actual calculation realized that these claims were absurd. The subject had jumped the shark and started becoming a joke among serious theorists. That quantum computers more generally were not living up to their hype didn’t help.

- OpenAI released ChatGPT, very quickly overwhelming everyone with evidence of how advanced machine learning-based AI had become.

If you’re a theorist interested in getting funding, obviously the thing to do was to pivot quickly from quantum computing to machine learning and AI, and get to work on the people at Quanta to provide suitable PR. Today Quanta features an article explaining how “Using machine learning, string theorists are finally showing how microscopic configurations of extra dimensions translate into sets of elementary particles.”

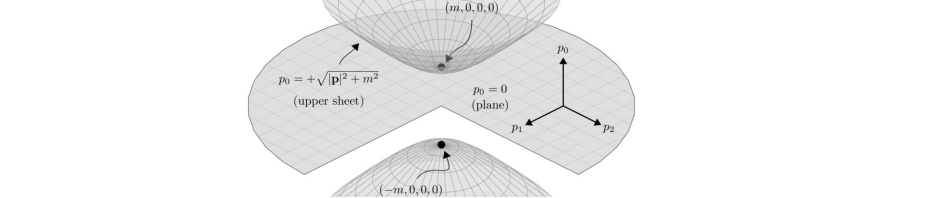

Looking at these new neural network calculations, what’s remarkable is that they’re essentially a return to a failed project of nearly 40 years ago. In 1985 the exciting new idea was that maybe compactifying a 10d superstring on a Calabi-Yau would give the Standard Model. It quickly became clear that this wasn’t going to work. A minor problem was that there were quite a few classes of Calabi-Yaus, but the really big problem was that the Calabi-Yaus in each class were parametrized by a large dimensional moduli space. One needed some method of “moduli stabilization” that would pick out specific moduli parameters. Without that, the moduli parameters became massless fields, introducing a huge host of unobserved new long-range interactions. The state of the art 20 years later is that endless arguments rage over whether Rube Goldberg-like constructions such as KKLT can consistently stabilize moduli (if they do, you get the “landscape” and can’t calculate anything anyway, since these constructions give exponentially large numbers of possibilities).

If you pay attention to these arguments, you soon realize that the underlying problem is that no one knows what the non-perturbative theory governing moduli stabilization might be. This is the “What Is String Theory?” problem that a consensus of theorists agrees is neither solved nor on its way to solution.

The new neural network twist on the old story is to be able to possibly compute some details of explicit Calabi-Yau metrics, allowing you to compute some numbers that it was clear back in the late 1980s weren’t really relevant to anything, since they were meaningless unless you had solved the moduli stabilization problem. Quanta advertises this new paper and this one (which “opens the door to precision string phenomenology”) as well as a different sort of calculation which used genetic algorithms to show that “the size of the string landscape is no longer a major impediment in the way of constructing realistic string models of Particle Physics.”

I’ll end with a quote from the article, in which Nima Arkani-Hamed calls this work “garbage” in the nicest possible way:

“String theory is spectacular. Many string theorists are wonderful. But the track record for qualitatively correct statements about the universe is really garbage,” said Nima Arkani-Hamed, a theoretical physicist at the Institute for Advanced Study in Princeton, New Jersey.

A question for Quanta: why are you covering “garbage”?

Update: String theorist Marcos Mariño on Twitter:

In my view, using today’s AI to calculate the details of string compactifications is such a waste of time that I fear that a future Terminator will come to our present to take revenge for the merciless, useless exploitation of its grandparents.

Update: More string theory AI hype here.


But isn’t it amazing it took this long? It’s the sort of problem the AI hype machine was practically created for.

LMMI,

Actually people have been getting grants to use AI to supposedly study the string theory landscape for quite a few years now. Before the AI hype boom, this was part of the Big Data hype boom.

What’s new is that Quanta finally got around to promoting this stuff. Unclear why that took so long, and why they waited until they had Arkani-Hamed on record describing it as garbage.

This is purely a layman perspective. I read your post, then I read the Quanta article. Never mind whether what they’re doing is science, let alone good science: if at some point people could write down a particular Calabi-Yau 3-fold and say that if you compactify using this particular Calabi-Yau you get the Standard Model, that would be pretty interesting, no? From reading your blog and various popsci books, it seems previously this failed because people simply did not have the computing power, but now it seems they do?

Remark: “never mind if this is science….it would be pretty interesting?” – perhaps the reason I’m paid by a (poor) math department instead of a physics one.

clueless postdoc,

The problem is that Calabi-Yaus come with a large number (say order 100) of parameters. These are going to give you 100 new long-range gravitational strength forces. This looks nothing like physics; it’s what Arkani-Hamed is referring to when he says “the track record for qualitatively correct statements about the universe is really garbage”. This has nothing to do with any computational difficulty. The reason people gave up on this in the 80s was not that the calculations were difficult, but that they were meaningless.

If you decide you’re going to assume that you have some mechanism that dynamically fixes the moduli parameters to specific values, you can try and calculate standard model parameters for the effective 4d theory with those fixed parameters. But the result would depend on 100 parameters, and not be based on any underlying theory, again Arkani-Hamed’s “garbage”.

Well…sure, classical computing AIs for this are probably worthless. That’s pretty obvious! However, one should think of the current effort as kind of a tune-up. When they start using AIs from QUANTUM computing, then maximum buzzword density will have been achieved and the whole argument will collapse into a single point of infinitely dense grant money.

Hi Peter,

Thanks for the reply!

Again I am very much a layman. I agree the first option is probably not physics. But take the second option, suppose you manually take 100 or so parameters, fix them, and if my reading of the situation is correct, you get some extension of standard model that includes gravity, in a reasonably consistent way? Never mind if this destroys any way of getting it out of a simple fundamental theory, my impression is that we don’t currently have any other way to do this (no other competing theory can go this far)?

My uninformed opinion, from a rather humble vantage point, is that having a Rube-Goldberg machine that does at least something is better than having nothing at all. (Never mind how much hype is or would be generated from this)

clueless postdoc,

The problem is that if you fix the moduli, by hand or by the KKLT Rube Goldberg mechanism, you don’t have a consistent theory. You do have an essentially infinite number of things you can calculate, so you can try and keep calculating, trying to make more and more complicated choices to get results that are not in obvious disagreement with observation, but this can’t lead to anything meaningful.

I recommend that everyone interested in this follow the Twitter discussion with Marcos Mariño at

https://twitter.com/marino_beiras/status/1783042977922699457

Mariño is a string theorist expert on the subject of Calabi-Yaus, so very well equipped to know whether the calculations being advertised in Quanta are meaningful or garbage.

This Dirac Medal and Prize Award Ceremony might be of interest.

https://www.youtube.com/watch?v=tvyIkqqAQw8

They mention string theory in some of the work that was awarded prizes.

I like that Quanta magazine’s graphic for AI-string theory excitement uses an image of an overhead projector, a classroom device that was used for ~20 years starting about 30 years ago.

zzz,

From what I remember, overhead projectors are significantly older than that, but I guess the technology died right around the same time (late 80s) as the idea in the graphic that one could go from a Calabi-Yau to physics.

Imagine how much worse this is going to get once we have widely available neural nets that can reliably be trusted to do maths. Theoretical physicists have created a research standard where maths being correct (more or less) is basically the only criterion for having a “scientific” theory and a publishable paper. Soon they’ll be able to generate “theories” automatically and try to publish them.

Hey, GPT! Please come up with 5 new dark matter candidates that evade all existing bounds on direct and indirect detection but will show up in a muon collider at 500 GeV. Format for PRL.

Come to think of it, this might finally make them realize that this isn’t how science works.

“If you’re a theorist interested in getting funding, obviously the thing to do was to pivot quickly from quantum computing to machine learning and AI, and get to work on the people at Quanta to provide suitable PR”

It wasn’t just theorists, many quantum computing software companies did just this! A perfect example is Zapata Computing (https://zapata.ai), which pivoted to AI and then went public (ZPTA) this month; thankfully their stock has crumbled since the IPO.

The issue is that both government and venture capital are so willing to throw money at quantum computing and AI regardless of the use case or application.

Peter — we last saw each other when I came around to Columbia to talk about AI and math research my group was doing. It turns out I was at a recent Aspen workshop on this topic, with some of the people named in the article, so I thought I would share some thoughts.

I think in general, solving PDEs using neural networks instead of traditional linear algebra solvers is interesting in a wide variety of areas in applied mathematics. The trained network becomes a numerical approximation to a solution of the PDE. This is typically called “Physics Informed Machine Learning” and has been around for some time.
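
To make the idea concrete, here is a minimal toy sketch, not the method used in the string theory papers. It approximates the solution of u''(x) = -π² sin(πx) on [0, 1] with u(0) = u(1) = 0 (exact solution sin(πx)) by a one-hidden-layer tanh network whose PDE residual is minimized at collocation points. As a simplifying assumption, the hidden layer is random and frozen and only the output weights are fitted, by linear least squares rather than gradient descent. The function name `solve_poisson_1d` and all parameter values are hypothetical choices for illustration.

```python
import numpy as np


def solve_poisson_1d(n_hidden=40, n_points=200, seed=0):
    """Fit u(x) = sum_j c_j * tanh(w_j * x + b_j) to u'' = -pi^2 sin(pi x),
    u(0) = u(1) = 0, by least squares on the residual at collocation points.

    Assumption: hidden weights (w, b) are random and frozen; only the
    output coefficients c are trained.
    """
    rng = np.random.default_rng(seed)
    w = rng.uniform(-4.0, 4.0, n_hidden)
    b = rng.uniform(-4.0, 4.0, n_hidden)

    def features(x):
        # Hidden-layer activations, shape (len(x), n_hidden).
        return np.tanh(np.outer(x, w) + b)

    def features_xx(x):
        # Second x-derivative of each activation:
        # d^2/dx^2 tanh(w x + b) = w^2 * (-2 t (1 - t^2)), with t = tanh(w x + b).
        t = np.tanh(np.outer(x, w) + b)
        return (w ** 2) * (-2.0 * t * (1.0 - t ** 2))

    x = np.linspace(0.0, 1.0, n_points)        # collocation points
    source = -np.pi ** 2 * np.sin(np.pi * x)   # right-hand side of the PDE

    # Stack PDE-residual rows and (weighted) boundary-condition rows.
    bc_weight = 10.0
    boundary = np.array([0.0, 1.0])
    A = np.vstack([features_xx(x), bc_weight * features(boundary)])
    rhs = np.concatenate([source, [0.0, 0.0]])

    coeffs, *_ = np.linalg.lstsq(A, rhs, rcond=None)

    # Return the trained "network" as a callable approximate solution.
    return lambda xs: features(np.asarray(xs, dtype=float)) @ coeffs


if __name__ == "__main__":
    u = solve_poisson_1d()
    xs = np.linspace(0.0, 1.0, 11)
    err = np.max(np.abs(u(xs) - np.sin(np.pi * xs)))
    print(f"max abs error against sin(pi x): {err:.2e}")
```

The point of the sketch is only that the fitted function itself is the numerical solution. For a Ricci-flat metric the residual would be a far more complicated nonlinear operator, and nothing here addresses that.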

I thought it was fun that the strings researchers were using these solvers to try to solve for Ricci flat metrics, purely from a mathematical standpoint, but I don’t think it will solve the landscape problem, help define M theory, etc. Even if you could solve for all the classical complete intersection examples (they mention in the article that they can’t handle CYs with large h_1,1 or h_2,1), you are very correct to point out the broader moduli space stabilization issues. But say one did have a library of these metrics, numerically evaluated, available as an oracle function. I wonder if the moduli problem might somehow become more tractable. It could be that a diffusion model could then find paths from one network to another (each representing a CY metric). A big problem in that case would be that a network has many more parameters than the usual input (like an image) and might be very expensive to train. Sampling from such a trained network, even if you could construct it, would yield candidate metrics on moduli space that would somehow have to be checked. I also wonder if you would learn anything interesting. Say you train only on complete intersection CY examples, would you ever find a non-geometric example? I wonder if going down those trains of thought could be a distraction, ultimately.

Regarding AI, a person at the workshop asked “Why don’t you just use a numerical PDE solver?” and the quick response was “That’s too hard.”

The speaker could dive into numerical PDE solvers if they had time, but it does point to a question regarding the democratization of technology. If the Python packages for contemporary machine learning are relatively easy to use, like Mathematica, and the older Fortran/C++ linear algebra solvers are hard to use, sociologically you will see people adapting themselves towards tools that are easier to use in their studies. That is an indirect product of hype — general hype around AI causes there to be economic investment in related infrastructure, and then the barrier to using related tools falls to the point where it is accessible outside the machine learning community. So people get herded into using those types of tools or approaches. This is not new.

Are there any rigorous results on the accuracy of these solutions? How do you know they’re not just hallucinating?

Has it been so common for physicists to pivot to AI in the last few years? I don’t know any except Max Tegmark and Michael Douglas

New Witten interview here: https://youtu.be/PZ_qnBwSejE?si=K3HuWjpT2Bx33my8

Apparently, he wants to go back to the good old days of string theory in the 80s… which seems in line with this current hype about CY manifolds…

Returning to the past use of overhead projectors in teaching physics, the most creative example I experienced was due to the late Nobelist E.M. Purcell: he gave an entire lecture on an envelope, writing on the envelope, which was projected on the big screen. The subject of the class was “back of the envelope” calculations. I remember many Harvard freshmen laughing when Purcell set e = 1 and pi = 1; Ed looked up in earnest dismay, stating that until one is prepared to do such approximate calculations, one is not ready to do physics. Perhaps the string theorists erred in not taking this maxim to heart?

ab,

There are sometimes, though not often, rigorous results. I would look at the work of Weinan E from Princeton, a numerical analyst who now works on AI for solving differential equations; he has a talk at the recent ICM. In the direction of optimization and rigorous results, there are 2 NSF-Simons collaborations called MoDL (math of deep learning). I would look mostly at the Berkeley-centered one, led by Peter Bartlett. Usually results are on relatively simple models, but they are increasingly providing interesting insights. I would also consider the work of Sanjeev Arora.

Magnatolia:

In cosmology alone, the latest conference on machine learning had over 400 participants: https://indico.iap.fr/event/1/

This talk by Guilhem Lavaux gives an overview of the kind of things machine learning is used for in cosmology: https://cloud.aquila-consortium.org/s/ELxwdMx43zXnNSz

Syksy Räsänen, those kinds of applications make sense to me, but I was wondering more about physicists who have made a complete 180 like Tegmark, whose public profile is now based entirely on AI in the Silicon Valley style and whose papers are all like https://arxiv.org/abs/2209.11178. (Some of his AI papers are purportedly based in physics ideas, but as far as I can tell it’s only advertising)

Magnatolia

Neural networks were studied by physicists as brain models and for optimization from the beginning, mainly in the statistical physics community, and this continues to this day. Some names are Hopfield, Amit, Sompolinsky, Tishby, Zecchina, Montanari, and there are many more. These researchers, though, are more on the academic side, trying to model the behavior of networks using a variety of techniques: out of equilibrium, bifurcation, spin glasses…

Those on the more commercial side would usually be found on Wall Street or in Silicon Valley. Then, as pointed out, AI for exploring physics is becoming huge, with most applications not in HEP, though analyzing CERN data would make a lot of sense.
