There’s been very little blogging here the past month or so. For part of the time I was on vacation, but another reason is that there just hasn’t been very much to write about. Today I thought I’d start looking at the talks from this week’s Strings 2024 conference.

The weird thing about this version of Strings 20XX is that it’s a complete reversal of the trend of recent years to have few if any talks about strings at the Strings conference. I started off looking at the first talk, which was about something never talked about at these conferences in recent years: how to compactify string theory and get real world physics. It starts off with some amusing self-awareness, noting that this subject was several years old (and not going anywhere…) before the speaker was even born. It rapidly though becomes unfunny and depressing, with slides and slides full of endless complicated constructions, with no mention of the fact that these don’t look anything like the real world, recalling Nima Arkani-Hamed’s recent quote:

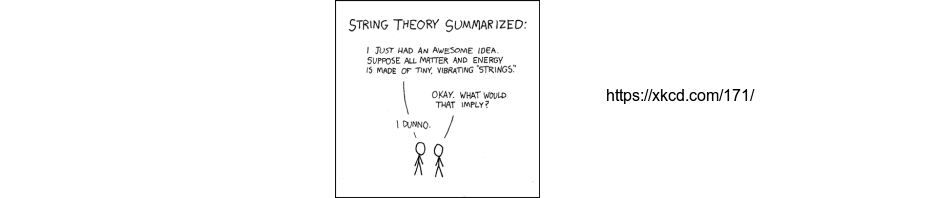

“String theory is spectacular. Many string theorists are wonderful. But the track record for qualitatively correct statements about the universe is really garbage”

The next day started off with Maldacena on the BFSS conjecture. This was a perfectly nice talk about an idea from 25-30 years ago about what M-theory might be that never worked out as hoped.

Coming up tomorrow is Jared Kaplan explaining:

why it’s plausible that AI systems will be better than humans at theoretical physics research by the end of the decade.

I’m generally of the opinion that AI won’t be able to do really creative work in a subject like this, but have to agree that likely it will soon be able to do the kind of thing the Strings 2024 speakers are talking about better than they can.

The conference will end on Friday with Strominger and Ooguri on The Future of String Theory. As at all string theory conferences, they surely will explain how string theorists deserve an A+++, great progress is being made, the future is bright, etc. They have put together a list of 100 open questions. Number 83 asks what will happen now that the founders of string theory are retiring and dying off, suggesting that AI is the answer:

train an LLM with the very best papers written by the founding members, so that it can continue to set the trend of the community.

That’s all I can stand of this kind of thing for now without getting hopelessly depressed about the future. I’ll try in coming weeks to write more about very different topics, and stop wasting time on the sad state of affairs of a field that long ago entered intellectual collapse.

Update: The slides for the AI talk are here. The speaker is Jared Kaplan, a Johns Hopkins theorist who is a co-founder of Anthropic and on leave working as its Chief Science Officer. His talk has a lot of generalities about AI and its very fast progress, little specifically about AI doing theoretical physics.

Did anyone who consented to this being made public know anything about LLMs??

As with many AI aficionados, these guys seem to have forgotten or (more cynically) ignored the fact that even here, the “Garbage In, Garbage Out” principle still applies.

The first talk, by Montero, addresses the key issue but its conclusions look scientifically unserious: «There’s constantly new stuff going on. It’s extremely exciting… We can chart the String Landscape and find where we are in it» should be replaced with «If the string landscape has ≈ 10^500 vacua we have no idea how to find if we are in it, we got stuck, it’s extremely frustrating».

It is not true that there has been nothing to blog about. I would be extremely interested in a post about the recent proof of geometric Langlands.

Almost as depressing as the LLM bit is the idea that the ‘founding members’ should have anything to do with guiding the future direction of the field. I can’t think of any other subject where you could say something like that with a straight face.

hrdlifil,

The problem with writing about the geometric Langlands proof is that I don’t know much about it, and lack the time/energy it would take to learn enough to write something useful. Here is where I need guest bloggers…

Fred J.,

What’s remarkable about the string theory community is that they seem quite happy with the subject’s turn to producing what Arkani-Hamed identifies as garbage, to the point of finding an automated system for producing greater amounts of garbage an attractive vision for the future.

Felix,

The idea seems to be to find some way to get around Planck’s insight into scientific progress (“one funeral at a time”).

Training LLMs and then asking them to theorize about strictly quantitative aspects is not going to lead anywhere. LLMs are pretty bad at quantitative reasoning, or at least not as good as they are at text-related queries.

For the matter at hand, string theory and more purely theoretical subjects with a deep math background, it’s completely hopeless to do so. And I am sure that some people have already attempted that, feeding in large numbers of PDFs about a subject and asking questions about it. At least I did.

Also check this Nature Reviews Physics paper:

https://www.nature.com/articles/s42254-023-00581-4

(Science in the age of large language models)

“train an LLM with the very best papers written by the founding members, so that it can continue to set the trend of the community”

I echo the sentiment of David Roberts.

I do not understand physics enough to judge or criticise string theory. I do know about LLMs. I am in shock at the stupidity of that statement. My secondary reaction is: oh, how I’m feeling now is maybe how it has felt for you all for the past 20+ years.

Note that Yuji Tachikawa, who is behind the question and, presumably, also the corresponding hint “Train an LLM …” has a penchant for humorous paper titles and/or acknowledgements (see e.g. 1508.06679 or the acknowledgements in 1312.2684 and 2203.16689). I am pretty sure that the suggestion to train a language model on some papers was really just a joke.

@Cobi

That’s what I hope. It undermines what I guess is the point of the document, and given that other people are talking, in total sincerity, about AI exploring the Landscape as a way to move forward, it’s a worrying thought that people might take it seriously.

The work I respect the most on the mathematics underlying the hypothetical M-theory (divorced from any judgement about its relation to our universe) has been such that it could not have been extrapolated from “the very best papers written by the founding members, so that it can continue to set the trend of the community.”

Cobi, surely the Tachikawa question is just a joke, but organisers wrote “100 questions… all of them very interesting” instead of selecting really interesting questions. That’s the problem.

I don’t understand how anyone can claim that LLMs as they are currently implemented can really lead to serious progress in something like theoretical physics when they manifestly are incapable of even elementary quantitative understanding. Show me a single LLM that correctly answers the question “Which weighs more, one pound of feathers or two pounds of hammers?”. The latest enterprise-level ChatGPT (along with every other one I’ve tried) says that they weigh the same, because that question is *almost* the same wording as a famous “trick” question. When LLMs can’t reliably produce the accurate statement that two is greater than one, forgive me for not having much faith that they will produce deep insights into quantum gravity.

I didn’t mean to suggest that Yuji’s question was a joke, just the corresponding hint. Whether the questions should have been further curated by the organisers is I guess a matter of taste. If the purpose was to have an open brainstorming session, then not making a preselection is kind of the point.

@Douglas GPT4 answered it correctly: “Two pounds of hammers weigh more than one pound of feathers. Two pounds is always heavier than one pound, regardless of what the items are.”

Douglas Natelson,

I don’t think the question is whether LLMs will produce deep insights into quantum gravity, but whether they can do what current string theorists are doing as well or better. “Insights into quantum gravity” is not what we’re getting from current string theory research, take a look at the talks at Strings 2024 to see what we are getting.

String theorists are already claiming to use LLMs to do original string theory research, in particular setting them to work on calculating things involving huge numbers of constructions of supposed string vacua and approximations to Calabi-Yau metrics. Kaplan’s talk would have been more interesting if he looked at this sort of thing and where it is going. Such calculations are part of Arkani-Hamed’s “garbage”, and LLMs are already happily doing them. That they will soon (if not already) be able to do them better and faster than human string theorists is quite plausible. LLMs should also be able to use the corpus of tens of thousands of worthless string theory papers to get as good as humans at generating and publishing papers, at writing grant proposals and getting them funded.

In addition, there’s a huge amount of money out there looking for “AI” projects to fund, and a huge appetite from science journalists like those at Quanta for stories about the wonders of how AI will lead to great research progress.

I wish Kaplan had been talking about these topics rather than the more generic topics he chose.

About the Tachikawa question, see this exchange on Twitter

https://x.com/AlessandroStru4/status/1798631213168840956

I think Tachikawa is (seriously) motivated by a very real problem for string theory that Strumia points to. The generation of the “First Superstring Revolution” was trained in real physics and did their formative research in QFT, but those trained from 1984 on often skipped lightly over this and their formative years were spent mastering technical issues in string theory. I’ve become increasingly pessimistic that theorists who start their career with that kind of training will ever do anything other than keep working down the blind alley they were sent down at the beginning of their career.

Worse than this is that the past 20 years or more of string theorists should have been well aware that this was a dead or dying field that hadn’t worked out, but decided to devote their lives to it anyway. The whole subject has gone from attracting smart intellectually ambitious young people with the talent to work on promising new ideas to attracting instead intellectually ambitious young people unable to see the difference between a promising new idea and a failed one.

@Meng, Google’s Gemini got it right, after I posted. Mea culpa. GPT via enterprise-level Bing Copilot got it wrong as of this morning. Perplexity got it wrong last night and gets it correct this morning. Interesting.

@Peter, I have no doubt that certain rote-like calculations (keep grinding through order by order diagrams, where there are patterns in what is being done) could be automated well. LLMs are already quite good at spitting out Python code for common tasks based on what they’ve scraped from GitHub. Still, a fundamental lack of math literacy has to be a showstopper for many points of interest to physicists. Anyway, enough out of me.

The explosion of new data and observations in cosmology, and the theory papers resulting from it, seems very promising and interesting. The work on the cosmological constant, candidates for dark matter, gravity from entanglement, etc. seems quite interesting.

Much more interesting than the dead horse named String Theory.

The notion of training current LLM architectures on theoretical physics papers and expecting anything serious to emerge is laughable; however, the notion of using an approach similar to what was done with AlphaFold, but to search for ‘compactifications of string theory to get real world physics’, doesn’t seem unreasonable. IF such a search could be made exhaustive and came up empty, wouldn’t this falsify string theory? At least it seems it would be much better to have a machine spinning its wheels doing this than physicists.

Brett Smolenski,

You don’t need AI to investigate the question of whether string theory makes any predictions about the real world. Just ask any of the experts working in the field. This experiment has now often been done, with the result that the honest answer is simply no. The people working on this know there are no predictions, they just believe that there’s still some hope of a breakthrough which would lead to predictions.

You will sometimes find people claiming that there are predictions. In all cases though either the people doing this don’t know what they’re talking about, or are using a non-standard meaning for “prediction”. Vafa for example these days often claims that string theory makes “predictions”, but he also says that if the “prediction” turns out to be wrong it doesn’t mean that there’s anything wrong with string theory.

Hi Peter,

Just want to say thank you. I was an advanced theoretical physics undergrad a few years ago hoping to do string theory as research (spurred on by Brian Greene’s highly irresponsible book). Your blog (and your book) made me realise the sad state of affairs in the string theory community (as exemplified in Strings 2024) and I switched to math. Now, every time I see the current status of string theory, I want to thank you.

Researcher in over-hyped, speculative physics pivots to researcher in over-hyped subset of AI and now claims that the latter will magically help with the former! You can’t make this stuff up.

“LLMs should also be able to use the corpus of tens of thousands of worthless string theory papers.” This statement includes a serious threat to advancement of scientific knowledge unless existing policies of citation of copyrighted materials are enforced.

LLMs do not credit original sources for learning materials that are protected by copyrights. The citation of previous work provides three benefits: 1. Potential verification or indictment of the validity of source material; 2. Credit for prior work to scholars who depend upon such credit for general reputation enhancement including promotions and grants; 3. Credit to professional societies and publishers for providing outlets for the original research work.

Not to worry. I asked ChatGPT to speak in what it takes to be the voices of Susskind, Witten, Kaku, Motl and others regarding String Theorists’ past pronouncements on the advantages of String Theory over all other approaches.

Here are the results:

———————-

ChatGPT:

“Your approach to quantum gravity is so outdated, it belongs in a history book, not a physics journal.”

“Comparing your theory to String Theory is like comparing a horse-drawn carriage to a spaceship—quaint, but hopelessly obsolete.”

“If your theory had any less predictive power, it would be a random number generator.”

“You know, your work might have merit—in an alternate universe where String Theory doesn’t dominate.”

“Your calculations are charmingly quaint, like a child’s attempt to explain the cosmos with crayons.”

“String Theory has elegance, beauty, and predictive power. Your theory has… well, it exists, I suppose.”

“I haven’t seen this many dead ends since the last maze I solved. Have you considered taking up puzzles instead of physics?”

“Your attempts at a unified theory are like a high school science project compared to the grandeur of M-Theory.”

“In the realm of theoretical physics, String Theory is a symphony. Your work, I’m afraid, is just background noise.”

“M-Theory is the only game in town, and it looks like you’re still trying to figure out the rules of checkers.”

———-

Well, while I may prefer the originals, by November, this should be much closer. And, it’s not that far off now from what we have to show for 41 years of String Theoretic leadership in fundamental physics.

@ Bob Y,

We humans also don’t cite the textbooks and papers we’ve read to become proficient in a field. There is nothing wrong with this.

See Scott Aaronson’s blog for a link to another argument that we’re just a very few years away from AI going “superintelligent”. Thinking again about Brett Smolenski’s comment, maybe the way things will work out is that superintelligent AI will not crank out more garbage research, but quickly reach the conclusion that string theory is a failed research program not worth pursuing. If that happens, what will be the reaction of the (human) physics research community?

Before that happens, AI should be able to resolve some simpler questions about part of the string theory research program, such as

1. Is the string theory landscape just pseudoscience?

2. Does the “Swampland Program” make any sense at all?

From the last bit of Strings 2024, it seems the situation is that there will be a Strings 2025 in January in Abu Dhabi, but no one yet has stepped up to organize a Strings 2026. Maybe it won’t be necessary?

Reading this piece by Woit, I had a flashback to the famous Bogdanov affair, where a PhD thesis on quantum gravity of questionable quality was approved and defended. An advisor who approved the thesis topic said: ‘All these were ideas that could possibly make sense. It showed some originality and some familiarity with the jargon. That’s all I ask.’ LLMs are excellent at ‘familiarity with the jargon’. Feeding AI hype into the string machine? Sounds like a lot of funding.

Sadly that person was Roman Jackiw. I imagine he was back-pedalling furiously.

@Smolenski: “an approach similar to what was done with AlphaFold, but to search for ‘compactifications of string theory to get real world physics’, doesn’t seem unreasonable.”

But you can test (in vitro, crystallography, etc.) the results of AlphaFold.

And the fortune of AlphaFold (now AlphaFold3) is that it is very, very close to wet-lab results.

AlphaFold and the protein structure problem are different: there are many thousands of solved proteins to learn from. In string theory there is nothing like that.