For the latest on quantum gravity, readers might want to look at talks from some events of the last couple of weeks. At the new ICTP-SAIFR theoretical physics institute in Sao Paulo, a school on quantum gravity has talks available here, with a follow-up workshop here. At Stanford last week, the topic was Frontiers of Quantum Gravity and Cosmology, in honor of Renata Kallosh and Stephen Shenker.

Matt Strassler was at the Stanford conference, and he blogs about it here. He describes most of the speakers as “string theorists” who are no longer working on string theory, and most of the quantum gravity talks as not being about string theory (this is also true of the ICTP-SAIFR workshop). I don’t really understand his comment:

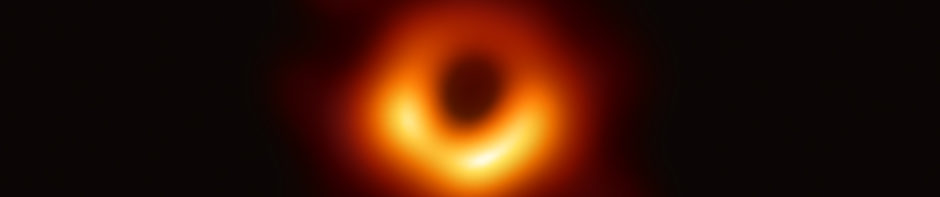

Why has the controversy gone on so long? It is because the mathematics required to study these problems is simply too hard — no one has figured out how to simplify it enough to understand precisely what happens when black holes form, radiate particles, and evaporate.

since the problem isn’t “too hard” mathematics, but the lack of a consistent theory (which he makes clear later in the posting).

Most remarkably, he described the talk by Lenny Susskind, one of the leading promoters of string theory, as follows:

Susskind stated clearly his view that string theory, as currently understood, does not appear to provide a complete picture of how quantum gravity works. Well, various people have been saying this about string theory for a long time (including ’t Hooft, and including string theory/gravity experts like Steve Giddings, not to mention various experts on quantum gravity who viscerally hate string theory). I’m not enough of an expert on quantum gravity that you should weight my opinion highly, but progress has been so slow that I’ve been worried about this since around 2003 or so. It’s remarkable to hear Susskind, who helped invent string theory over 40 years ago, say this so forcefully. What it tells you is that the firewall puzzle was the loose end that, when you pulled on it, took down an entire intellectual program, a hope that the puzzles of black holes would soon be resolved. We need new insights — perhaps into quantum gravity in general, or perhaps into string theory in particular — without which these hard problems won’t get solved.

For many years now, the most influential figures in string theory have given up on the idea of using it to say anything about particle physics, and results from the LHC have put further nails in that coffin, removing the small remaining hope that SUSY or extra dimensions would be seen at the TeV scale. The “firewall” paradox seems to have made it clear that string theory-inspired AdS/CFT doesn’t resolve the problem of non-perturbative quantum gravity, leading to renewed interest in other approaches. This leaves string theory as just a “tool” for studying topics like heavy-ion physics, and things don’t seem to be working out very well there either.