Besides the Hawking book, which was a disappointment in many ways, I recently also finished reading a much better and more interesting book which deals with some of the same topics, but in a dramatically more substantive and intelligent manner. The Shape of Inner Space is a joint effort of geometer Shing-Tung Yau and science writer Steve Nadis. Yau is one of the great figures in modern geometry, a Fields medalist and current chair of the Harvard math department. He has been responsible for training many of the best young geometers working today, as well as encouraging a wide range of joint efforts between mathematicians and physicists in various areas of string theory and geometry.

Yau begins with his remarkable personal story, starting with a childhood spent in difficult circumstances in Hong Kong. He gives a wonderful description of the new world that opened up to him when he came to the US as a graduate student at Berkeley, where he joyfully immersed himself in the library and in a wide range of courses. Particularly influential for his later career was a course by Charles Morrey on non-linear PDEs; Yau describes how it lost all of its students except him, many of them having left to protest the bombing of Cambodia.

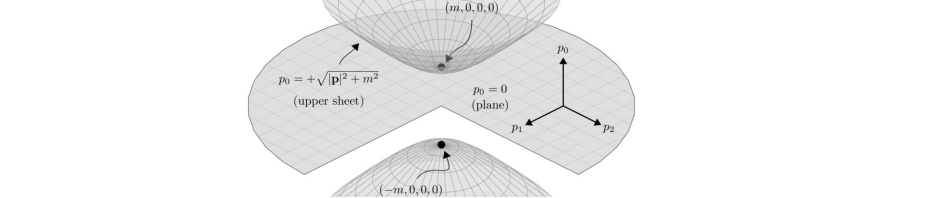

He then tells some of the story of his early career, which culminated in his proof of the Calabi conjecture. This conjecture says, roughly, that if a compact Kähler manifold has vanishing first Chern class (a topological condition), then each of its Kähler classes contains a unique Kähler metric with vanishing Ricci curvature. It’s a kind of uniformization theorem, saying that these manifolds come with a “best” metric. Such manifolds are now called “Calabi-Yau manifolds”, and while the ones of interest for string theory unification have six (real) dimensions, they exist in all even dimensions, in some sense generalizing the special case of the elliptic curve (a torus) among two-dimensional surfaces.
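For readers who want something closer to the precise statement, here it is in standard notation (ω, φ and F are conventional labels, not the book’s). The second part sketches how the geometric problem turns into a single non-linear PDE for a function φ, the complex Monge-Ampère equation, which is the equation Yau’s proof actually solves; this shows only the setup, not the proof.

```latex
% M: compact Kähler manifold of complex dimension n, with Kähler form \omega.
% Theorem (Calabi conjecture, proved by Yau):
%   if c_1(M) = 0 in H^2(M, \mathbb{R}), the class [\omega] contains exactly one
%   Kähler form \tilde\omega with \operatorname{Ric}(\tilde\omega) = 0.
%
% Reduction to a PDE: since c_1(M) = 0, write \operatorname{Ric}(\omega) = i\partial\bar\partial F
% for a smooth real function F (fixed up to an additive constant, chosen so that the
% last condition below holds), and look for \tilde\omega = \omega + i\partial\bar\partial\varphi.
% The condition \operatorname{Ric}(\tilde\omega) = 0 then becomes
(\omega + i\partial\bar\partial\varphi)^n = e^{F}\,\omega^n,
\qquad \omega + i\partial\bar\partial\varphi > 0,
\qquad \int_M e^{F}\,\omega^n = \int_M \omega^n .
```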

Much of the early part of the book is concerned not directly with physics, but with explaining the significance of the mathematical subject known as “geometric analysis”. Besides the Calabi conjecture, Yau also explains some of the other highlights of the subject, which include the positive-mass conjecture in general relativity, Donaldson and Seiberg-Witten theory, and the relatively recent proof of the Poincaré conjecture. Some readers may find parts of this heavy going, since Yau is ambitiously trying to explain some quite difficult mathematics (for instance, what a Kähler manifold is). Having tried to do some of this kind of thing in my own book, I’m very sympathetic to how difficult it is, but also very much in favor of authors giving it a try. One may end up with a few sections of a book that only a small fraction of its intended audience can really appreciate, but that’s not necessarily a bad thing, and it is arguably much better than writing content-free books that don’t even try to explain to a non-expert audience what a subject is really about.

A lot of the book is oriented towards explaining a speculative idea that I’m on record as describing as a failure. This is the idea that string theory in ten dimensions can give a viable unified theory, by compactification of six of its dimensions. When you do this and look for a compact six-dimensional manifold that will preserve N=1 supersymmetry, what you find yourself looking for is a Calabi-Yau manifold. Undoubtedly one reason for Yau’s enthusiasm for this idea is his personal history and the fact that these manifolds bear his name. Unlike other authors, though, Yau goes into the question in depth, explaining many of the subtleties of the subject, as well as outlining some of the serious problems with the idea.

I’ve written elsewhere that string theory has had a huge positive effect on mathematics, and one source of this is the array of questions and new ideas about Calabi-Yau manifolds that it has led to. Yau describes a lot of this in detail, including the beginnings of what has become an important new idea in mathematics, mirror symmetry, as well as the speculation (“Reid’s fantasy”) that the all-too-large number of different classes of Calabi-Yaus might all be connected to one another. He also explains something he has been working on recently: pursuing an idea that goes back to Strominger in the eighties, he looks at an even larger class of possible compactifications that includes non-Kähler examples. One fundamental problem for string theorists is that there are already too many Calabi-Yaus, so they’re not necessarily enthusiastic about hearing about more possibilities:

University of Pennsylvania physicist Burt Ovrut, who’s trying to realize the Standard Model through Calabi-Yau compactifications, has said he’s not ready to take the “radical step” of working on non-Kahler manifolds, about which our mathematical knowledge is presently quite thin: “That will entail a gigantic leap into the unknown, because we don’t understand what these alternative configurations really are.”

Even in the simpler case of Calabi-Yaus, a fundamental problem is that these manifolds don’t have a lot of symmetry that can be exploited. As a result, while Yau’s theorem says a Ricci-flat metric exists, one doesn’t have an explicit description of it. If one wants to get beyond calculations of crude features of the physics coming out of such compactifications (such as the number of generations), one needs to be able to do things like calculate integrals over the Calabi-Yau, and this requires knowing the metric. Yau explains this problem, and how it has blocked any hope of calculating things like fermion masses in these models. He gives a general summary of the low level of success that this program has so far achieved, and quotes various string theorists on the subject:
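As an illustration of the kind of “crude feature” that needs no metric: in the simplest (“standard embedding”) heterotic compactifications, the number of generations is fixed by topology alone, through the Hodge numbers of the Calabi-Yau threefold X. The formula below is the standard textbook one rather than anything quoted from the book, and it applies only to that simplest class of models.

```latex
% Standard-embedding heterotic compactification on a Calabi-Yau threefold X
% (textbook formula, not from the book). h^{1,1}, h^{2,1}: Hodge numbers of X.
\chi(X) = 2\left(h^{1,1} - h^{2,1}\right), \qquad
n_{\text{gen}} = \tfrac{1}{2}\,\lvert \chi(X) \rvert = \lvert h^{1,1} - h^{2,1} \rvert .
% Example: the quintic hypersurface in CP^4 has h^{1,1} = 1, h^{2,1} = 101, so
% \chi = 2(1 - 101) = -200 and n_gen = 100, far from the observed three.
```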

But there is considerable debate regarding how close these groups have actually come to the Standard Model… Physicists I’ve heard from are of mixed opinion on this subject, and I’m not yet sold on this work or, frankly, on any of the attempts to realize the Standard Model to date. Michael Douglas… agrees: “All of these models are kind of first cuts; no one has yet satisfied all the consistency checks of the real world”…

So far, no one has been able to work out the coupling constants or mass…. Not every physicist considers that goal achievable, and Ovrut admits that “the devil is in the details. We have to compute the Yukawa couplings and the masses, and that could turn out completely wrong.”

Yau explains the whole landscape story and the heated debate about it, for instance quoting Burt Richter on the landscape-ologists (he says they have “given up.. Since that is what they believe, I can’t understand why they don’t take up something else – macrame for example.”) He describes the landscape as a “speculative subject” on which he is glad, as a mathematician, not to have to take a position:

It’s fair to say that things have gotten a little heated. I haven’t really participated in this debate, which may be one of the luxuries of being a mathematician. I don’t have to get torn up about the stuff that threatens to tear up the physics community. Instead, I get to sit on the sidelines and ask my usual sorts of questions – how can mathematicians shed light on this situation?

So, while I’m still of the opinion that much of this book describes a failed project, on the whole it does so in an intellectually serious and honest way, so that anyone who reads it is likely to learn something and to get a reasonable, if perhaps overly optimistic, summary of what is going on in the subject. Only at a few points do I think the book goes a bit too far, mostly in two chapters near the end. One of these purports to cover the possible fate of the universe (“the fate of the false vacuum”), and the book wouldn’t lose anything by dropping it. The next chapter deals with string cosmology, a subject about which it’s hard to say much that is positive without going over the edge into hype.

Towards the end of the book, Yau makes a point that I very much agree with: fundamental physics may reach (or may already have reached) the point where it can no longer rely on frequent inspiration from unexpected experimental results, and when that happens, one avenue left to try is to get inspiration from mathematics:

So that’s where we stand today, with various leads being chased down – only a handful of which have been discussed here – and no sensational results yet. Looking ahead, Shamit Kachru, for one, is hopeful that the range of experiments under way, planned, or yet to be devised will afford many opportunities to see new things. Nevertheless, he admits that a less rosy scenario is always possible, in the event that we live in a frustrating universe that affords little, if anything in the way of empirical clues…

What we do next, after coming up empty-handed in every avenue we set out, will be an even bigger test than looking for gravitational waves in the CMB or infinitesimal twists in torsion-balance measurements. For that would be a test of our intellectual mettle. When that happens, when every idea goes south and every road leads to a dead end, you either give up or try to think of another question you can ask – questions for which there might be some answers.

Edward Witten, who, if anything, tends to be conservative in his pronouncements, is optimistic in the long run, feeling that string theory is too good not to be true. Though, in the short run, he admits, it’s going to be difficult to know exactly where we stand. “To test string theory, we will probably have to be lucky,” he says. That might sound like a slender thread upon which to pin one’s dreams for a theory of everything – almost as slender as a cosmic string itself. But fortunately, says Witten, “in physics there are many ways of being lucky.”

I have no quarrel with that statement and more often than not, tend to agree with Witten, as I’ve generally found this to be a wise policy. But if the physicists find their luck running dry, they might want to turn to their mathematical colleagues, who have enjoyed their fair share of that commodity as well.

Update: I should have mentioned that the book has a website here, and there’s a very good interview with Yau at Discover that covers many of the topics of the book.

Update: There’s more about the book and an interview with Yau here.