Emanuel Derman has a fascinating new book out, Models.Behaving.Badly, which I’ve been intending to write about here for a while now. One of the problems that has kept delaying this is that every time I start to write something I notice that a new review of the book is out, and it seems to me quite a bit better than anything I have to say. One example that comes to mind is this review from Cathy O’Neil at mathbabe a while ago; another is a new one out today at Forbes. Any book that attracts so many thoughtful reviews has to be worth reading, and has to be capturing something important about what is going on in the world.

There is a somewhat frivolous virtue of the book that I haven’t noticed other reviews discussing: the wonderful title. I can’t help but enjoy the double meaning linking two of the out-of-control groups that are features of downtown life here in New York. One might think that drunken fashion models in downtown clubs and quants at financial firms don’t have a lot to do with each other, and yet…

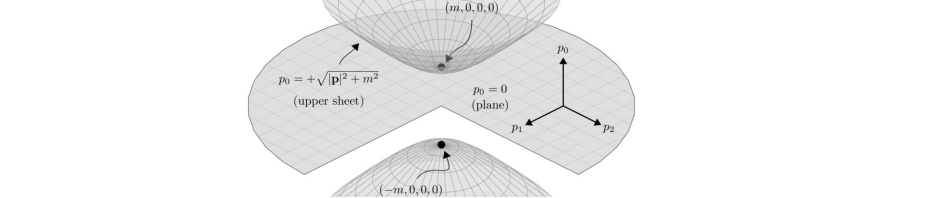

Derman’s first book, My Life as a Quant (which I wrote a little bit about here in the early days of this blog), tells the story of his move from high energy theory into financial modeling. He was one of the first to do this, and that book reflects a time when the financial industry was riding high, with the role of quants and their models relatively uncontroversial. With his new book, he’s again one of the first, this time to take a disillusioned but serious look from the inside at where we are today:

I am deeply disillusioned by the West’s response to the recent financial crisis. Though chance doesn’t treat everyone fairly, what makes the intrinsic brutalities of capitalism tolerable is the principle that links risks and return: if you want to have a shot at the up side, you must be willing to suffer the down. In the past few years that principle has been violated.

His focus is on the role that models inspired by physics have played in this debacle, arguing that they have been used in a fundamentally misconceived way, and explaining the evolution of his own understanding:

… I began to believe it was possible to apply the methods of physics successfully to economics and finance, perhaps even to build a grand unified theory of securities.

After twenty years on Wall Street I’m a disbeliever. The similarity of physics and finance lies more in their syntax than their semantics. In physics you’re playing against God, and He doesn’t change His laws very often. In finance you’re playing against God’s creatures, agents who value assets based on their ephemeral opinions. The truth therefore is that there is no grand unified theory of everything in finance. There are only models of specific things.

Much of the book is devoted to explicating his views about the importance of distinguishing between “theories”, which are supposed to accurately capture phenomena, and “models”, which are metaphors that only approximately capture some aspects of phenomena. As examples of theories, he discusses not just QED, the quintessential accurate theory of the physical world, but also Spinoza’s theory of the emotions. Besides the financial models that are the focus of the book, he also covers a wide range of other failed models. The book begins with a short memoir of growing up in South Africa, where he was a member of a Zionist youth organization, and its failed models as well as the racial ones of apartheid played a role in his coming of age.

If you’re part of the modern world that generally finds actual books too long and time-consuming to read, Derman has an often enjoyable blog, and for the truly ADD-afflicted, he also has one of the very few Twitter feeds I’ve seen worth following.