The hundreds of expository articles about supersymmetry written over the last twenty years or more tend to begin by giving one of two arguments to motivate the idea of supersymmetry in particle physics. The first of these goes something like “supersymmetry unifies bosons and fermions, isn’t that great?” This argument doesn’t really make a whole lot of sense since none of the observed bosons or fermions can be related to each other by supersymmetry (basically because there are no observed boson-fermion pairs with the same internal quantum numbers). So supersymmetry relates observed bosons and fermions to unobserved, conjectural fermions and bosons for which there is no experimental evidence.

Smarter people avoid this first argument since it is clearly kind of silly, and use a second one: the “fine-tuning” argument first forcefully put forward by Witten in lectures about supersymmetry at Erice in 1981. This argument says that in a grand unified theory extension of the standard model, there is no symmetry that can explain why the Higgs mass (or electroweak symmetry breaking scale) is so much smaller than the grand unification scale. The fact that the ratio of these two scales is so small is “unnatural” in a technical sense, and its small size must be “fine-tuned” into the theory.

This argument has had a huge impact over the last twenty years or so. Most surveys of supersymmetry begin with it, and it justifies the belief that supersymmetric particles with masses accessible at the LHC must exist. Much of the experimental program at the Tevatron and the LHC revolves around looking for such particles. If you believe the fine-tuning argument, the energy scale of supersymmetry breaking can’t be too much larger than the electroweak symmetry breaking scale, i.e. it should be in the range of a few hundred GeV to 1 TeV or so. Experiments at LEP and the Tevatron have ruled out much of the energy range in which one expects to see something, and the fine-tuning argument is already starting to be in conflict with experiment. For more about this, see a recent posting by Jacques Distler.

Last week at Davis I was surprised to hear Lenny Susskind attacking the fine-tuning argument, claiming that the distribution of possible supersymmetry breaking scales in the landscape was probably pretty uniform, so there was no reason to expect it to be small. He believes that the anthropic explanation of the cosmological constant shows that the “naturalness” paradigm that particle theorists have been invoking is misguided, so there is no valid argument for the supersymmetry breaking scale to be low.

I had thought this point of view was just Susskind being provocative, but today a new preprint appeared by Nima Arkani-Hamed and Savas Dimopoulos entitled “Supersymmetric Unification Without Low Energy Supersymmetry and Signatures for Fine-Tuning at the LHC“. In this article the authors go over all the problems with the standard picture of supersymmetry and describe the last twenty-five years or so of attempts to address them as “epicyclic model-building”. They claim that all these problems can be solved by adopting the anthropic principle (which they rename the “structure” or “galactic” or “atomic” principle to try and throw off those who think the “anthropic” principle is not science) to explain the electroweak breaking scale, and assuming the supersymmetry breaking scale is very large.

It’s not surprising you can solve all the well-known problems of supersymmetric extensions of the standard model by claiming that all effects of supersymmetry only occur at unobservably large energy scales, so all we ever will see is the non-supersymmetric standard model. By itself this idea is as silly as it sounds, but they do have one twist on it. They claim that even if the supersymmetry breaking scale is very high, one can find models where chiral symmetries keep the masses of the fermionic superpartners small, perhaps at observably low energies. They also claim that in this case the standard calculation of running coupling constants still more or less works.

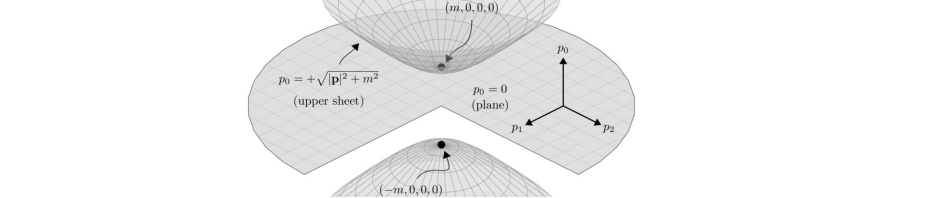

The main experimental argument for supersymmetry has always been that the three gauge coupling constants run in such a way that they meet more or less at a point, corresponding to a unification energy not too far below the Planck scale, and that this works much better with supersymmetry than without it. It turns out that this calculation works very well at one loop, but is a lot less impressive at two loops. Read as a prediction of the strong coupling constant in terms of the other two, it comes out 10-15% different from the observed value.
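To make the “prediction of the strong coupling” reading concrete, here is a minimal one-loop sketch. It assumes rough input values of the inverse couplings at the Z mass (with the usual GUT normalization of the U(1) coupling), the standard one-loop coefficients for the Standard Model and for the MSSM, and, for the MSSM, that all superpartners sit near the Z mass. The function name `predict_alpha_s` is just illustrative. At one loop each inverse coupling is a straight line in ln(μ), so one can find where α₁ and α₂ meet and run α₃ back down from there.

```python
import math

# Rough inputs at the Z mass (assumptions, not precision values):
# inverse couplings alpha_i^{-1}(M_Z) with the GUT normalization of U(1).
M_Z = 91.19  # GeV
ALPHA_INV_MZ = {1: 59.0, 2: 29.6, 3: 8.5}

# Standard one-loop coefficients b_i, defined by
# alpha_i^{-1}(mu) = alpha_i^{-1}(M_Z) - b_i / (2 pi) * ln(mu / M_Z).
B_SM = {1: 41 / 10, 2: -19 / 6, 3: -7.0}
B_MSSM = {1: 33 / 5, 2: 1.0, 3: -3.0}


def predict_alpha_s(b):
    """Find where alpha_1 and alpha_2 meet, then run the common value back down
    with b_3 to predict alpha_3(M_Z). Returns (unification scale in GeV, alpha_s)."""
    # ln(mu / M_Z) at which alpha_1^{-1} equals alpha_2^{-1}
    t = 2 * math.pi * (ALPHA_INV_MZ[1] - ALPHA_INV_MZ[2]) / (b[1] - b[2])
    alpha_inv_unified = ALPHA_INV_MZ[2] - b[2] * t / (2 * math.pi)
    # Run alpha_3^{-1} from the unification scale back down to M_Z
    alpha3_inv_mz = alpha_inv_unified + b[3] * t / (2 * math.pi)
    return M_Z * math.exp(t), 1.0 / alpha3_inv_mz


for name, coeffs in (("SM", B_SM), ("MSSM", B_MSSM)):
    scale, alpha_s = predict_alpha_s(coeffs)
    print(f"{name}: alpha_1 = alpha_2 near {scale:.1e} GeV, predicted alpha_s(M_Z) = {alpha_s:.3f}")
```

With these inputs the MSSM coefficients give roughly 0.116, close to the measured value of about 0.12, while the Standard Model coefficients give roughly 0.07. This is only the one-loop estimate; two-loop running, where the agreement degrades, and threshold corrections are left out.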

I don’t think the argument for the light fermionic superpartners is particularly compelling and the bottom line here is that two of the most prominent particle theorists around have abandoned the main argument for supersymmetry. Without the pillar of this argument, the case for supersymmetry is exceedingly weak and my guess is that the whole idea of the supersymmetric extension of the standard model is now on its way out.

One other thing of note: in the abstract the authors refer to “Weinberg’s successful prediction of the cosmological constant”. The standard definition of what a prediction of a physical theory is has now been redefined down to include “predictions” one makes by announcing that one has no idea what is causing the phenomenon under study.

To various people: I’m sorry, but I really don’t have the time to answer questions on QFT in a comments thread. You might want to consider posting to sci.physics.research. Anyway, various theories of SUSY breaking do have hidden sectors in which the breaking occurs and is then transmitted in some way to the visible sector. Finally, it’s a theorem that particle statistics are governed by one-dimensional representations of the fundamental group of the configuration space of n identical points in space. For d>2 spatial dimensions, that’s the symmetric group, whose only one-dimensional representations are the trivial one (bosons) and the sign, or antisymmetric, one (fermions). In two dimensions, it’s the braid group, which has all sorts of fun one-dimensional representations. These give what are called anyons.
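The difference between d>2 and d=2 comes down to one relation, which a short sketch can check. It assumes one-dimensional unitary representations, so an exchange of two identical particles multiplies the wavefunction by a phase exp(iθ); the function name `is_allowed_exchange_phase` is hypothetical. θ = 0 corresponds to bosons, θ = π to fermions, and anything else to anyons.

```python
import cmath
import math


def is_allowed_exchange_phase(theta: float, spatial_dim: int) -> bool:
    """Can exchanging two identical particles multiply the wavefunction by
    exp(i*theta), in a one-dimensional unitary representation?

    In a one-dimensional rep every exchange generator maps to the same number
    x = exp(i*theta), so the braid relation s1 s2 s1 = s2 s1 s2 holds
    automatically. For spatial_dim > 2 the fundamental group is the symmetric
    group, which also imposes s^2 = 1 (two exchanges are trivial)."""
    if spatial_dim < 2:
        raise ValueError("this sketch only covers spatial_dim >= 2")
    x = cmath.exp(1j * theta)
    if spatial_dim > 2:
        return cmath.isclose(x * x, 1.0, abs_tol=1e-12)
    return True  # braid group: any phase on the unit circle is allowed


for theta in (0.0, math.pi, math.pi / 3):
    print(f"theta = {theta:.3f}: 3d allowed = {is_allowed_exchange_phase(theta, 3)}, "
          f"2d allowed = {is_allowed_exchange_phase(theta, 2)}")
```

In three dimensions only θ = 0 and θ = π survive; in two dimensions θ = π/3 is allowed as well.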

To Peter, I continually wonder what physicists you hang out with. None of this stuff is secret. I was at a department wide talk which brought this stuff up in the past few months. Again, I’m rather curious what else you would have us tell the experimentalists to look for.

Hi Aaron,

I wasn’t under the impression that the degree of fine-tuning needed if the bound on the Higgs goes up by 5-10 GeV was such that it would cause people to give up on supersymmetry. If so, one might not even need to wait for the LHC if the luminosity at the Tevatron improves sufficiently.

There are at least two reasons why a lot more honesty about the failings of low-energy supersymmetry would be a healthy thing (and I don’t see a downside since I don’t think there’s any danger the experimentalists at the LHC won’t look for superpartners).

1. Experimentalists deserve an honest appraisal of how likely various possibilities are so they can make intelligent allocations of their resources.

2. The dishonesty and over-hyping of supersymmetry is part of a larger problem in particle theory. The amount of this in superstring theory/M-theory is vastly greater, and no experiments are likely to save us from this there. Theorists should start behaving a lot more like scientists, with a lot less hype and a lot more healthy skepticism about ideas that don’t work.

Hi Chris,

Sure, you can add other gauge groups, but if the particles we see have charges with respect to them, they’ll experience a new force and will behave differently, so there are all sorts of bounds on such a thing.

If you assume that the particles we know about are all uncharged with respect to the new gauge group, then it is a “hidden sector”, and its effects will be hard to observe (perhaps only through gravity).

Hi JC,

The “Planck Scale” is only the scale of quantum gravity if you assume that however you calculate the effective Newton’s constant, you get dimensionless numbers of order unity and dimensionful ones coming from the scale. If the calculation of the effective Newton’s constant contains an exponentially small dimensionless number, the scale could be quite different.

I don’t see any reason to try and get the standard model forces as induced from some other theory, but people have speculated that quantum gravity is induced from the other gauge forces. One intriguing fact about 2d WZW models is that they can be formulated as theories with a gauge (or loop group) symmetry, but the Sugawara construction shows they are automatically also non-trivial reps of the diffeomorphism group, so in some sense one is getting for free a theory of gravity from a theory with just a gauge symmetry.
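The “for free” part of the Sugawara construction can be quantified: the stress tensor built as a bilinear in the currents generates a Virasoro algebra (the centrally extended Lie algebra of diffeomorphisms of the circle) with central charge c = k·dim(g)/(k + h∨). A small sketch, restricted to SU(n) at level k as an assumption, where dim(g) = n² - 1 and the dual Coxeter number is h∨ = n; the function name is illustrative.

```python
from fractions import Fraction


def sugawara_central_charge(n: int, level: int) -> Fraction:
    """Virasoro central charge c = k * dim(g) / (k + h^vee) produced by the
    Sugawara construction for the SU(n) WZW model at level k."""
    if n < 2 or level < 1:
        raise ValueError("need n >= 2 and level k >= 1")
    dim_g = n * n - 1  # dimension of su(n)
    dual_coxeter = n   # h^vee for su(n)
    return Fraction(level * dim_g, level + dual_coxeter)


for n, k in ((2, 1), (2, 2), (3, 1)):
    print(f"SU({n}) level {k}: c = {sugawara_central_charge(n, k)}")
```

For example SU(2) at level 1 gives c = 1 and SU(2) at level 2 gives c = 3/2.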

On a different note, how do we know that the known elementary particles ONLY obey bose and fermi statistics? How do we know that they are NOT obeying more general parabose and parafermi statistics, or for that matter some other generalized permutation symmetry groups?

There is a point raised by JC that I have never heard discussed, but I feel ought to be taken seriously. If there was a “new” kind of force that conserved all the quantum numbers of (say) the Strong interaction, but was much weaker, how would we ever know about it? What is to stop me claiming that SU(3)xSU(3)xSU(2)xU(1) is the “real” theory of the world with my weak-strong interaction as the other SU(3)?

That should be

G = <T>

the expectation value of the stress energy tensor.

Just by dimensional analysis, the Planck scale is the scale where gravity becomes relevant. On the other hand, the Planck scale might not actually be what we think it is. Some theories with extra dimensions have the Planck scale and the weak scale coinciding.
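The dimensional analysis is easy to spell out: the only energy one can build from ħ, c and G is E_P = sqrt(ħc⁵/G). A minimal sketch, assuming CODATA 2018 values for the constants (the function name `planck_energy_gev` is just illustrative). It also shows the caveat about the effective Newton’s constant: if G came with an extra small dimensionless factor ε, the scale would move by 1/sqrt(ε).

```python
import math

# CODATA 2018 values in SI units
HBAR = 1.054571817e-34          # J s
C = 299_792_458.0               # m / s
G_NEWTON = 6.67430e-11          # m^3 kg^-1 s^-2
JOULES_PER_GEV = 1.602176634e-10


def planck_energy_gev(g_newton: float = G_NEWTON) -> float:
    """E_P = sqrt(hbar c^5 / G), the only energy built from hbar, c and G, in GeV."""
    return math.sqrt(HBAR * C**5 / g_newton) / JOULES_PER_GEV


print(f"Planck energy: {planck_energy_gev():.3e} GeV")
# A Newton's constant smaller by a factor 1e-4 raises the scale by a factor 100.
print(f"with G * 1e-4: {planck_energy_gev(G_NEWTON * 1e-4):.3e} GeV")
```

With the measured G this gives about 1.22 × 10¹⁹ GeV.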

The problem with keeping gravity classical is that you have to couple it to quantum stress-energy. The obvious way to do that,

G = <T>,

has problems. I’m open to suggestions on other ways to do it. Sakharov’s induced gravity gets things off by orders of magnitude as I remember it, but I don’t remember it very well.

Epicycles are completely irrelevant here. When you add epicycles, it means you’re adding parameters to your theory to account for the fact that the theory doesn’t fit the data. The standard model fits the data amazingly well.

There are theories where gravity unifies at the same point as the other forces. GUTs are going to have massive gauge bosons at the scale of the breaking. You can break large gauge groups in stages E8 -> E6 -> SO(10) …, for example. There’s all sorts of stuff that can happen, really.

The near convergence of the couplings was not imposed in any form. It follows directly from the formulae.

Finally, I doubt the electroweak forces and QED are “induced” in any way (I’m not sure what that would mean, really.) The theories that we have for them now work just fine.

Aaron,

I never quite understood exactly why the scale for a quantum theory of gravity has to be taken at the Planck scale specifically. Are there any rigorous and/or mathematical arguments which show that this always has to be the case? Why can’t the quantum gravity scale be at something like several million or billion TeV, or for that matter several billion or trillion times larger than the Planck scale itself? Is the Planck scale for quantum gravity just accepted on faith or by fiat decree?

Is there a possibility that gravity is just strictly a classical force with no quantum counterpart? Are there any experiments that have confirmed any quantum gravity phenomena, such as the Unruh effect? (Would the Unruh effect even be a good candidate to search for in a tabletop experiment?) Is this belief in quantum gravity just an extrapolation of the three other forces being quantized? Could gravity just be nothing more than a “residual force” produced by higher order quantum effects, in the style of Sakharov’s induced gravity?

On the surface, adding in more particles, symmetries, and other “junk” into the standard model feels a lot like adding in more “epicycles” and free parameters into an already grotesque looking theory. (Well, maybe not completely “grotesque” looking.) Could the electroweak and qcd forces be an “induced” force of some sort, in the spirit of Sakharov?

With the idea of three coupling constants merging at one point at some high energy scale, why doesn’t gravity also converge to that same point? Or, for that matter, if there’s a 5th or 6th force that we aren’t aware of yet, would its coupling constant also converge to this same point? Is this convergence point just something that was noticed by running the coupling constants to higher energy scales, or was it imposed a priori by fiat decree?

There are a few answers to your question about the standard model. The most obvious is that it doesn’t incorporate gravity and we’ve got pretty good evidence that gravity exists, so any theory really ought to include it. One can ask at what energy scales does a quantum theory have to take gravity into account. The answer to that is approximately the Planck scale. That’s why it’s important.

The other answers are a bit more technical. One is that, as has been discussed a bit here, the standard model isn’t natural. There are two types of naturalness, actually. What it really comes down to, however, is that the scale of the electroweak interaction is much, much smaller than the Planck scale. We don’t know why this is true. This is dissatisfying.

There’s also technical naturalness, which says that the Higgs mass is naturally at the Planck scale because of loop effects. Supersymmetry stops this from happening. So, while it doesn’t explain why the weak scale is so much smaller than the Planck scale, it does stabilize the Higgs mass.
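A rough numerical sketch of what technical naturalness is worried about, assuming a hard momentum cutoff Λ and keeping only the top-quark loop with Yukawa coupling y_t ≈ 1, which gives the standard textbook estimate δm² ≈ -(3y_t²/8π²)Λ². This is an order-of-magnitude illustration, not a full calculation; the names `top_loop_correction` and `tuning_fraction` are hypothetical, and the 115 GeV Higgs mass in the usage lines is just an illustrative input.

```python
import math


def top_loop_correction(cutoff_gev: float, top_yukawa: float = 1.0) -> float:
    """Leading quadratically divergent top-quark loop contribution to the Higgs
    mass-squared, delta m^2 ~ -(3 y_t^2 / (8 pi^2)) * Lambda^2, in GeV^2."""
    return -3.0 * top_yukawa**2 / (8.0 * math.pi**2) * cutoff_gev**2


def tuning_fraction(higgs_mass_gev: float, cutoff_gev: float) -> float:
    """Rough measure of fine-tuning, m_H^2 / |delta m^2|. Values far below 1 mean
    the bare mass has to cancel the loop term to many digits."""
    return higgs_mass_gev**2 / abs(top_loop_correction(cutoff_gev))


for cutoff in (1e3, 1e16, 1.22e19):
    print(f"cutoff {cutoff:.1e} GeV: m_H^2 / |delta m^2| ~ {tuning_fraction(115.0, cutoff):.1e}")
```

In supersymmetry the top loop is cancelled by the stop loop, so roughly speaking Λ is replaced by the superpartner masses; that is why the fine-tuning argument wants the supersymmetry breaking scale not far above the electroweak scale.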

A third reason to believe that there might be something beyond the standard model is that if you were to draw three random lines on a piece of paper, there’s little chance they’ll intersect at a single point. Nonetheless, if you look at the running of the couplings in the standard model, you get three lines that come reasonably close to intersecting. This is a coincidence that is not explained by the standard model. It could just be random, of course, but it could also be a clue.
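The “three lines” picture is literal at one loop: each inverse coupling is a straight line in ln(μ), so one can compute where each pair of lines crosses and measure how far apart the three crossings are. A minimal sketch, assuming rough inputs at the Z mass, the standard one-loop coefficients, and (for the MSSM) superpartners near the Z mass; the function names are illustrative. A spread of zero decades would mean an exact common intersection.

```python
import math
from itertools import combinations

# Rough inputs (assumptions): inverse couplings at M_Z, GUT-normalized U(1).
M_Z = 91.19  # GeV
ALPHA_INV_MZ = {1: 59.0, 2: 29.6, 3: 8.5}
B_SM = {1: 41 / 10, 2: -19 / 6, 3: -7.0}
B_MSSM = {1: 33 / 5, 2: 1.0, 3: -3.0}


def pairwise_crossings(b):
    """Scale in GeV at which each pair of lines alpha_i^{-1}(ln mu) crosses, using
    alpha_i^{-1}(mu) = alpha_i^{-1}(M_Z) - b_i / (2 pi) * ln(mu / M_Z)."""
    crossings = {}
    for i, j in combinations(sorted(b), 2):
        t = 2 * math.pi * (ALPHA_INV_MZ[i] - ALPHA_INV_MZ[j]) / (b[i] - b[j])
        crossings[(i, j)] = M_Z * math.exp(t)
    return crossings


def spread_in_decades(b):
    """Orders of magnitude separating the three pairwise crossing scales."""
    logs = [math.log10(mu) for mu in pairwise_crossings(b).values()]
    return max(logs) - min(logs)


for name, coeffs in (("SM", B_SM), ("MSSM", B_MSSM)):
    print(f"{name}: crossings spread over {spread_in_decades(coeffs):.2f} decades")
```

With these inputs the Standard Model crossings spread over several orders of magnitude in energy, while the MSSM crossings land within a small fraction of a decade of each other.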

As for why finding just the Higgs would be depressing, where would we go, then? Building a larger accelerator is unlikely. It means that this dataless purgatory we currently inhabit is likely to last for the foreseeable future. That would suck.

If you don’t like fine tuning, then, as I understand it, there’s just not much more room for the Higgs left. Another 5 or 10 GeV and you’ve got issues. Are you telling me that other considerations give you the same bounds?

“I just think that if one is honest one sees that the GUT and low-energy supersymmetry ideas are failures. If you don’t acknowledge failure and keep promoting failed theories you’re not doing science anymore.”

Such pessimism. You can’t wait five more years for them to turn on the damn machine before you make these sweeping pronouncements? Even if you want to argue that, in your sense of the word, SUSY makes no predictions, I assume you agree that there are plenty of things that would strongly support SUSY if discovered at the LHC? Are we now not only theorizing without the aid of experiment, but also pronouncing theories abject failures before even attempting to test them?

Let the experimenters do their work. If they just see a Higgs, we all go home. If they see SUSY, then, presumably you admit it wasn’t a completely false direction to pursue, and we start measuring the weak SUSY breaking parameters. If we see something else entirely unlike SUSY, we all go out and study that.

That’s the joy of experiment.

Are there any empirical reasons why the standard model is NOT the final answer to particle physics?

Or is it mainly ideological or philosophical reasons why people think the standard model is not the final answer?

Why would finding the Higgs particle be a depressing event? Could there be a 4th or 5th family of quarks/leptons heavier than the mass of the Higgs?

Why should we take the notion of a “Planck scale” energy seriously? Could it be possible that the “Planck energy” is just a product of several known physical constants which produces a “number” that has no physical relevance in the real world? Is there any empirical and/or fundamental reason why Newton’s gravitational constant is important at higher energy scales?

There are unitarity arguments that tell us something has to happen really soon. There are Higgsless models of EWSB. The ones I’ve heard about use boundary conditions in extra dimensions, but there could be others. I don’t want to say too much because I really don’t know much about them, but I’m pretty sure they predict something happening at LHC.
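The best-known unitarity argument of this kind is the Lee-Quigg-Thacker bound: requiring tree-level partial-wave unitarity in scattering of longitudinal W and Z bosons gives m_H² ≤ 8π√2/(3G_F). A minimal sketch evaluating it, assuming the standard value of the Fermi constant; the function name is illustrative, and the bound is a rough criterion (beyond it the Higgs sector becomes strongly coupled) rather than a sharp threshold.

```python
import math

G_FERMI = 1.1663787e-5  # GeV^-2, Fermi constant


def lee_quigg_thacker_bound() -> float:
    """Tree-level partial-wave unitarity bound on the Higgs mass,
    m_H^2 <= 8 * pi * sqrt(2) / (3 * G_F), returned in GeV."""
    return math.sqrt(8 * math.pi * math.sqrt(2) / (3 * G_FERMI))


print(f"m_H <~ {lee_quigg_thacker_bound():.0f} GeV")
```

The result is about 1 TeV, which is the sense in which something (a Higgs or a replacement for it) is expected at LHC energies.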

People might find this paper interesting as an outlook on what might come:

hep-ph/0312096

If you ask any particle physicist what lives in their nightmares about the next few years, the answer is this: that the LHC discovers a Higgs, and that’s it. Everything is completely described by the standard model. If that happens, we might just all have to go home.

The proliferation of undetermined parameters in supersymmetric GUTs comes not just from the supersymmetry breaking, but also from the GUT symmetry breaking (via the Higgs mechanism or whatever). In principle one can hope that some compelling model for supersymmetry breaking exists that predicts the 100 or so parameters, but the fact of the matter is that all phenomenologically viable methods of supersymmetry breaking are complicated, contrived, and don’t do much in the way of fixing those parameters.

I really wish people would reserve the term “prediction” for its standard usage: one has a well-defined model in which something is calculable and you calculate it. In the standard supersymmetry picture, you don’t know what causes supersymmetry breaking or even what its scale is, and the mass of the Higgs is constrained only by the very vague “naturalness” principle (just how much fine-tuning is too much?).

Essentially all low-energy supersymmetry and naturalness are telling you is that the Higgs can’t be too heavy. But there are other reasons for that (Higgs sector becomes strongly coupled if the Higgs mass is too high), so it isn’t really telling you anything new.

If I had a successful extension of the standard model I’d be preparing my speech for Stockholm. I just think that if one is honest one sees that the GUT and low-energy supersymmetry ideas are failures. If you don’t acknowledge failure and keep promoting failed theories you’re not doing science anymore.

How would the picture of the standard model change if no Higgs particle is discovered for several hundred thousand TeV above 1 TeV? What viable mechanisms could replace the Higgs in this case?

SO(10) also nicely accommodates neutrino masses. The dark matter candidate is also more robust than you indicate, I think. If you just write down the general Lagrangian, it’s easy to find Flavor Changing Neutral Currents (FCNCs) from SUSY. One way to eliminate them is by imposing a symmetry called R-parity by fiat. This then necessitates a Lightest Supersymmetric Particle (LSP) that fits all the cosmological requirements for a WIMP.
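R-parity has a standard compact definition, R = (-1)^(3(B-L)+2s), with B baryon number, L lepton number and s spin. It is +1 for Standard Model particles (and Higgs bosons) and -1 for their superpartners, so if it is conserved, superpartners are produced in pairs and the lightest one cannot decay. A small sketch, with exact fractions so that B = 1/3 and s = 1/2 are handled without rounding; the function name is illustrative.

```python
from fractions import Fraction


def r_parity(baryon, lepton, spin) -> int:
    """R = (-1)^(3(B - L) + 2s): +1 for Standard Model particles and Higgs
    bosons, -1 for their superpartners."""
    exponent = 3 * (Fraction(baryon) - Fraction(lepton)) + 2 * Fraction(spin)
    if exponent.denominator != 1:
        raise ValueError("3(B - L) + 2s must be an integer")
    return 1 if exponent.numerator % 2 == 0 else -1


particles = {
    "up quark": ("1/3", 0, "1/2"),
    "up squark": ("1/3", 0, 0),
    "electron": (0, 1, "1/2"),
    "selectron": (0, 1, 0),
    "photon": (0, 0, 1),
    "photino": (0, 0, "1/2"),
}
for name, (b, l, s) in particles.items():
    print(f"{name}: R = {r_parity(b, l, s):+d}")
```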

The 100 or so parameters you refer to are in the weak SUSY breaking lagrangian. They really parametrize our ignorance. One expects that when the mechanism of SUSY breaking is understood (assuming low energy SUSY really exists), there will be tons of relations among the parameters of that lagrangian. It would probably work the other way around. We’d measure the parameters in the weak SUSY breaking lagrangian, discover relations and use that to better understand the mechanism of SUSY breaking. Regardless, the idea that there really are 100 (or whatever) free parameters isn’t really true.

I already gave you a prediction of SUSY: the light Higgs. That it hasn’t been seen is one reason, I think, that people are getting a bit more skeptical. Also, one would hope to see all sorts of fun stuff at the LHC.

No one’s claiming SUSY is perfect. But, before you knock it too much, what other extensions of the standard model do you propose people study? Because, believe me, people are always interested in something new.

Hi Aaron,

At some point soon I’ll try and write up a more detailed discussion of the pros and cons of supersymmetry. The issues have been around now for more than two decades and the only interesting change I’ve seen recently is that Witten is concerned enough about this to have given several talks in the past year or two discussing the problems with supersymmetry. Also, now Susskind, Arkani-Hamed and Dimopoulos seem willing to argue that the problems of low-energy supersymmetry are such that perhaps the idea should be abandoned.

The standard ideology is that the idea of low-energy supersymmetry combined with grand unification is, as you say, “very compelling”. I’ve always disagreed with this and continue to do so strongly. I think the only statement that can be defended is that the idea has aspects that are “suggestive”. The two best arguments for this ideology are:

1. The fact that the particles in one generation fit rather naturally into the SO(10) spinor representation.

2. The coupling constant unification, which is off by 10-15%, an amount one could hope to ascribe to threshold effects.

(A third standard argument, that in this framework there is a candidate particle that could be dark matter, seems to me such a weak argument that I don’t think it is worth taking very seriously.)

I don’t think either of these is “very compelling”, partly because they come at a huge cost. A supersymmetric GUT is a vastly more complex theory than the standard model with at least 10 times more undetermined parameters. In return for this huge increase in the complexity of the theory, one gets the measly return of the two points mentioned above and a huge number of problems one has to find some way of arguing away (too much baryon number violation, FCNC, CP violation, etc.)

The huge number of undetermined parameters and lack of any idea of what the supersymmetry breaking scale is mean that it is extremely hard to get a real prediction out of this framework. The closest thing to one would be that of proton decay, but even here a prediction is possible only in a very tenuous sense. For a generic choice of the undetermined parameters one gets proton decay faster than experimentally observed. One can choose parameters that make the rate much smaller, although there is a point at which you run into trouble doing this. So supersymmetric GUTs are consistent with a huge range of possible proton decay rates, which is not exactly much in the way of a prediction.

There’s no trial going on. As I’m sure you know, the standard model works depressingly well. SUSY extends it in useful ways. In addition to what I’ve mentioned, it also provides a useful candidate for a WIMP. It does this, it improves coupling unification, and it sort of solves the naturalness problem; those seem like pretty good reasons to look at it.

With the Higgs not found yet, that’s a strike against it, sure. But, why the opprobrium? It’s a very nice idea that may or may not be correct.

As for doing QFT rigorously, none of the directions that I’ve seen out there interested me all that much. I’m glad people out there are working on it, and I’m glad they aren’t me. In the meantime, QFT works pretty damn well as we use it, so I’m not too constrained.

Aaron –

One difference between a civil and a criminal case in English law is that the verdict is based on the “balance of probabilities” in a civil case, whereas “proof beyond reasonable doubt” is required in a criminal case. With SUSY in the dock, I would deliver the former verdict against it, but not the latter. To put it another way, I do not think that SUSY is leading anywhere good (and I am not sure that I ever did). Feel free to prove me wrong, though.

As for the Millennium Prize, the fact that it exists at all is proof enough of failure on all of our parts. We should not be relying on someone to provide a silver bullet to cure the elementary problems of QFT. More people should be working on solving these problems: not just a handful of German mathematicians. I did what I could – what did you do?

There are plenty of QFTs without Lagrangian descriptions. Look up minimal models in CFT, for example.
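Part of what makes the minimal models a good example is that they are specified by representation-theory data rather than by an action: a central charge c = 1 - 6/(m(m+1)) and a finite Kac table of allowed primary weights h_{r,s} = (((m+1)r - ms)² - 1)/(4m(m+1)), with 1 ≤ r ≤ m-1 and 1 ≤ s ≤ m. A small sketch for the unitary series, labeled by an integer m ≥ 3 (one common convention; the function names are illustrative). For m = 3 this gives c = 1/2, the critical Ising model.

```python
from fractions import Fraction


def minimal_model_central_charge(m: int) -> Fraction:
    """c = 1 - 6 / (m (m + 1)) for the unitary minimal model labeled by m >= 3."""
    if m < 3:
        raise ValueError("unitary minimal models need m >= 3")
    return 1 - Fraction(6, m * (m + 1))


def kac_weights(m: int) -> set:
    """Distinct conformal weights h_{r,s} = (((m+1) r - m s)^2 - 1) / (4 m (m+1))
    for 1 <= r <= m - 1 and 1 <= s <= m."""
    return {
        Fraction(((m + 1) * r - m * s) ** 2 - 1, 4 * m * (m + 1))
        for r in range(1, m)
        for s in range(1, m + 1)
    }


for m in (3, 4):
    weights = sorted(kac_weights(m))
    print(f"m = {m}: c = {minimal_model_central_charge(m)}, weights = {[str(h) for h in weights]}")
```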

Also, of course SUSY has guided experimental searches. You have to trigger on something, and SUSY has been the most viable extension of the standard model for quite a while. I don’t think anyone would argue that it’s not worthwhile to look for. Experimentalists trigger on all sorts of other things however.

SUSY favors a light Higgs, by the way. (The tree level prediction is around the Z-mass, I think, which is what’s being referred to. Loop effects push it up.) In fact, the nondiscovery of the Higgs below 115 GeV or so has begun to push SUSY into the realm of fine tuning. Push it up too much more and the model loses a lot of its appeal.
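The tree-level bound and the loop effect can be put into numbers with a crude leading-log sketch. It assumes large tan β, degenerate stops with no stop mixing, only the top/stop loop, m_t = 175 GeV and v = 174 GeV (in the convention m_t = y_t v); the formula used is the standard leading-log expression m_h² ≈ m_Z² cos²2β + (3m_t⁴/4π²v²) ln(m_stop²/m_t²). The function name is illustrative, and real calculations include mixing and higher-order terms that shift the numbers noticeably.

```python
import math

# Assumed inputs (rough values, not precision numbers).
M_Z = 91.19    # GeV
V_EW = 174.0   # GeV, Higgs vev in the convention m_t = y_t * v
M_TOP = 175.0  # GeV


def mssm_light_higgs_mass(tan_beta: float, m_stop: float, m_top: float = M_TOP) -> float:
    """Crude leading-log estimate of the lightest MSSM Higgs mass in GeV.

    Tree level:  m_h^2 <= m_Z^2 cos^2(2 beta).
    One loop (top/stop only, large tan beta, degenerate stops, no stop mixing):
        delta m_h^2 = 3 m_t^4 / (4 pi^2 v^2) * ln(m_stop^2 / m_t^2)."""
    if m_stop < m_top:
        raise ValueError("this sketch assumes m_stop >= m_top")
    cos_2beta = (1 - tan_beta**2) / (1 + tan_beta**2)
    tree_sq = (M_Z * cos_2beta) ** 2
    loop_sq = 3 * m_top**4 / (4 * math.pi**2 * V_EW**2) * math.log(m_stop**2 / m_top**2)
    return math.sqrt(tree_sq + loop_sq)


for m_stop in (175.0, 300.0, 1000.0):
    print(f"m_stop = {m_stop:.0f} GeV: m_h ~ {mssm_light_higgs_mass(10.0, m_stop):.0f} GeV")
```

In this approximation, getting above 115 GeV needs stops well above a few hundred GeV, and since the stop masses feed back into the Higgs mass parameter, that is where the fine-tuning pressure comes from.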

The equation of string theory and SUSY is not a good idea. There are plenty of reasons to believe SUSY completely irrespective of strings. As you allude to, the two major ones are that it seems to protect the hierarchy of the weak scale versus the Planck scale (although I think this gets a bit iffy when you actually break the thing). The second is that, in the standard model, if you run the couplings up a ways, they almost unify, but not quite. If you add in SUSY, this agreement gets a lot better. If you believe that it’s too much a coincidence for the coupling to almost unify, then the fact that SUSY gets them closer is a big deal. Unfortunately, apparently, the two loop calculations spoil this a bit. Nonetheless, it’s still better than the standard model.

I’m rather surprised by the statement “The GUT and supersymmetry ideas have been intensively investigated, but don’t lead to any convincing predictions or compelling picture.” This is certainly false. The pictures are very compelling. The unification of the couplings points towards GUTs. The SO(10) GUT has a number of nice properties. GUTs also make predictions, the most important being proton decay. For this reason, the nonsupersymmetric SU(5) GUT is pretty much dead. IIRC, the supersymmetric SU(5) GUT isn’t doing so well, either, though there may be more room for fudging on that.
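Why proton decay is such a sensitive test can be seen from a dimensional estimate. If decay proceeds through exchange of heavy gauge bosons of mass M_X (dimension-six operators), the lifetime scales like τ ~ M_X⁴/(α_GUT² m_p⁵). A minimal sketch, assuming that scaling and dropping all O(1) factors and hadronic matrix elements, so only the trend should be trusted; the function name and the value α_GUT = 1/40 are illustrative.

```python
HBAR_GEV_S = 6.582119569e-25   # hbar in GeV * s
SECONDS_PER_YEAR = 3.156e7
M_PROTON = 0.938               # GeV


def proton_lifetime_years(m_x_gev: float, alpha_gut: float) -> float:
    """Dimensional estimate tau ~ M_X^4 / (alpha_GUT^2 * m_p^5), in years.

    Assumes decay via exchange of heavy gauge bosons of mass M_X (dimension-six
    operators); O(1) factors and hadronic matrix elements are dropped, so only
    the scaling with M_X and alpha_GUT should be taken seriously."""
    tau_natural = m_x_gev**4 / (alpha_gut**2 * M_PROTON**5)  # units of GeV^-1
    return tau_natural * HBAR_GEV_S / SECONDS_PER_YEAR


for m_x in (1e14, 1e15, 1e16):
    print(f"M_X = {m_x:.0e} GeV: tau ~ {proton_lifetime_years(m_x, 1 / 40):.0e} years")
```

Because of the fourth power, a factor of 10 in the unification scale changes the lifetime by a factor of 10⁴, which is why the lower unification scale of the non-supersymmetric theory is so much more exposed to the experimental limits.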

SUSY also makes predictions like the light Higgs and presents a very compelling picture. If you think that the only reason people work on supersymmetry is because string theorists need it, trust me when I tell you that the idea is laughable.

The fact is that if you can come up with an interesting extension of the standard model, people would be very interested in it. Precision electroweak measurements strongly constrain what you can do. For example, I always thought technicolor was a very pretty way to resolve the hierarchy problem and get rid of the Higgs kludge. Unfortunately, it has also been almost completely killed by experiment.

I also want to note that I don’t think anyone has a problem with the infinities in individual diagrams in QFT. There are prescriptions which avoid this, but the regularization procedure both works and is understood. This is not to say that we understand QFT. I don’t think we do. I’d love to replace it with something better defined. There’s a million bucks waiting for you if you manage to do it, so good luck.

Lastly, I’m sort of astounded how you manage to draw such sweeping conclusions from such minor issues. You want to believe that the field is much more dogmatic than it really is. Nobody’s abandoned much of anything, much less “the main argument for supersymmetry”. In fact, what is being done is that people are exploring new directions. I would think that you would think that this is a good thing. For a long time, people thought that naturalness was a useful criterion for model building. If some version of the anthropic principle turns out to be correct, then we can abandon naturalness for certain (but not all) parameters. This opens up the possibility of new, possibly interesting models to explore. That’s what Nima and Savas are doing.

I like exploration. Don’t you?

It looks increasingly likely that supersymmetry (and supergravity) has had its day and it is probably not a true symmetry of nature, even in broken form. Nature (or god if you prefer) is not obliged to follow the aesthetic ideals of mathematicians/physicists and might not even care about mathematical beauty/elegance as much as we do. If anything, this universe is looking more and more messy, inelegant and confusing! Personally though, I always found the idea of pairing off known bosons and fermions with superpartners like “squarks” artificial, and I would bet money that none of these “sparticles” are ever going to show up in any upcoming experiments. This is especially serious for string theory and “M-theory”, where supersymmetry is of course required in order to wield any analytical control (and to get the dimensions down to a mere 10). It was the Green-Schwarz superstring that started the whole string craze back in 1984, after all. Before that, nobody really cared. Even if superparticles did turn up in future experiments, it wouldn’t prove string theory, though it would make it more likely; but without supersymmetry somehow existing in nature, the work of the past 20 years all crumbles, back to the much-maligned old bosonic/tachyonic string in 26 dimensions. In effect, string theory sinks like a lead hippopotamus without supersymmetry.

Of course string theorists will conveniently take refuge in arguments that supersymmetry is manifest only at immense energies, just like they are now taking refuge in cop-out anthropic arguments. Supersymmetry and the consequent superstrings were indeed powerful and promising mathematical ideas and were worth pursuing, but they are wearing a bit thin now after 20 years.

Is there a possibility that the only reason why quantum field theory and renormalization are “accepted” these days is that nobody else has found a better theoretical framework which does NOT produce garbage calculations? It seems like any framework that increases the amount of calculational labor involved, just to get the same results as quantum field theory, largely gets ignored after a while. Why bother with these “alternative” frameworks when they reproduce the same results (with many more times the labor involved) as ordinary quantum field theory?

If quantum theory is more “fundamental” than classical physics, why do we still use the framework of Lagrangian dynamics as a “stepping stone” to eventually do quantum field theory? Is this just another case of “nobody has found a better way to do things”? Are there any “intrinsic quantum” frameworks which do NOT involve the intermediate step of using Lagrangian dynamics with an “ad-hoc” quantization prescription?

Are there any better quantum entities to calculate, besides the S-matrix?

Should we even believe that quantum theory retains its present form when we go to higher and higher energy scales?

One thing I’ve wondered about is how heavily the search for supersymmetry is biasing the data analysis or even experimental design of detectors. In the worst case it might affect the design of the triggers. Presumably experimentalists have a healthy enough skepticism about theory that they are likely to not believe in the supersymmetry picture too strongly.

Still, maybe they should be made aware of exactly how tenuous the argument for supersymmetry really is.

A recent worry of mine is whether SUSY is even biasing the results of experiments. Some years ago, a small signal for a charged Higgs was detected by L3 at CERN in a quark channel. Regrettably, it was at 69 GeV, thus too low for the MSSM. Quickly, it was averaged with measurements in *lepton* channels so that the effect went down from 3.5 to less than 2 sigma; and then the next edition of the PDG quoted a 95% confidence level limit above 75 GeV… I think all this “manoeuvre” was justified only if the faith in SUSY was (is) so strong as to be sure that H+ runs well above the Z0 mass.

Hello Matthew,

We are digressing from the main topic, so when I have had a chance to collect my thoughts, I will answer you by e-mail.

One last thought on the main topic: if we are marking the demise of supersymmetry, then I personally do not mourn its passing since, like twistor theory or strings, it is an interesting mathematical idea that never had much of a connection with physics. Superfields and superspace were interesting to study, but at the end of the day one ends up doing much the same things as before, only with less of a prospect of explaining reality.

As for mass hierarchies, this never bothered me anyway. Worrying about this problem, if it is a problem, is premature.

Hi Chris

Your points about QFT seem wrong to me… I’ll grant that there is no mathematically rigorous construction, but none of the points you make actually hinge on that.

“1. It is only a theory of scattering. The much-vaunted calculation of the anomalous magnetic moment of leptons takes the Compton scattering of a single photon as representing the interaction of the lepton with an external magnetic field without satisfactory justification.”

Well, the first justification is that it agrees to many decimal places 🙂 But more to the point, you can consider scattering off a heavy Dirac lepton (say, the muon) and take the limit as the mass of the heavy lepton goes to infinity. As far as I know, there’s nothing wrong with that.

“2. No theory of bound states exists, so how can one claim the prediction of the Lamb shift in a single-electron atom as a success?”

This is simply untrue. One can extract energy levels, for example, using lattice field theory. There is an algorithmic way to extract bound state properties from the path integral, we just need big enough computers.

As for the Lamb shift, IIRC you start with hydrogenic wave functions and consider perturbations to them, rather than the “textbook” plane-wave states. Again, since the system is essentially non-relativistic, this is justified.
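To make the lattice point concrete, here is a minimal Python sketch (assuming NumPy) for the simplest possible case: a one-dimensional quantum harmonic oscillator, not an actual field theory. The Euclidean path integral on a time lattice with spacing `a` is evaluated by quadrature on a position grid via a transfer matrix, and the energy gap E_1 - E_0 is read off from the exponential decay of the correlator <x(t) x(0)>. The function names, grid and parameter values are illustrative choices, not taken from any particular lattice code.

```python
import numpy as np


def transfer_matrix(x, a, m=1.0, omega=1.0):
    """Transfer matrix T(x, x') for the Euclidean lattice action
    S = sum_j [ m (x_{j+1} - x_j)^2 / (2a) + a V(x_j) ],  V(x) = m omega^2 x^2 / 2,
    with the position restricted to the uniform grid `x`."""
    dx = x[1] - x[0]
    V = 0.5 * m * omega**2 * x**2
    kinetic = np.exp(-m * (x[:, None] - x[None, :]) ** 2 / (2.0 * a))
    half_pot = np.exp(-0.5 * a * V)  # potential split symmetrically between the two ends
    # sqrt(m / (2 pi a)) normalizes the free kernel; dx is the integration measure.
    norm = np.sqrt(m / (2.0 * np.pi * a)) * dx
    return norm * half_pot[:, None] * kinetic * half_pot[None, :]


def correlator(T, x, n_time, t):
    """<x(t) x(0)> on a periodic Euclidean time lattice with n_time sites:
    Tr(T^(n_time - t) X T^t X) / Tr(T^n_time), i.e. the grid-discretized path integral."""
    X = np.diag(x)
    T_t = np.linalg.matrix_power(T, t)
    T_rest = np.linalg.matrix_power(T, n_time - t)
    Z = np.trace(np.linalg.matrix_power(T, n_time))
    return np.trace(T_rest @ X @ T_t @ X) / Z


def energy_gap(a=0.1, n_time=200, t=10, omega=1.0):
    """E_1 - E_0 from the decay C(t) ~ exp(-(E_1 - E_0) a t) (effective-mass estimate)."""
    x = np.linspace(-6.0, 6.0, 241)
    T = transfer_matrix(x, a, omega=omega)
    return np.log(correlator(T, x, n_time, t) / correlator(T, x, n_time, t + 1)) / a


if __name__ == "__main__":
    a = 0.1
    gap = energy_gap(a=a)
    # Exact gap of this lattice action: cosh(a E) = 1 + a^2 omega^2 / 2.
    lattice_exact = np.arccosh(1.0 + 0.5 * a**2) / a
    print(f"extracted gap: {gap:.5f}, lattice exact: {lattice_exact:.5f}")
```

With a = 0.1 and ω = 1 the extracted gap should agree with the exact lattice value arccosh(1 + a²ω²/2)/a ≈ 0.9996, approaching the continuum value ω as a → 0. Realistic lattice calculations cannot build the transfer matrix explicitly and instead estimate such correlators by Monte Carlo sampling, which is where the big computers come in.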

“3. If, as seems to me to be likely, we need to look beyond local field equations to cure the infinities, then I would much rather not do it with this framework. It is too messy, complicated and self-contradictory.”

It’s unclear which infinities you’re worried about. The diagrammatic infinities are cured by renormalization. Thanks to Wilson (and others) we actually understand this fairly well.

Hi Erin,

Unfortunately I think the present situation is that we don’t have any good ideas about how to extend the symmetries of the standard model. The GUT and supersymmetry ideas have been intensively investigated, but don’t lead to any convincing predictions or compelling picture. Some new ideas are desperately needed.

Hi JC,

My own best guess about what will ultimately happen with QFT is that it will turn out that a certain class of them, including the one describing the real world, can be interpreted as a formalism for dealing with representations of certain infinite dimensional symmetry groups, including the gauge and diffeomorphism groups. The idea is that there is some fundamental geometric structure, it has an infinite dimensional symmetry group with a distinguished representation, and this is the underlying mathematical structure that the standard model QFT is related to.

I posted a preprint about this a year or two ago, which hasn’t attracted any interest, partly for the quite good reason that the ideas I discuss are too vague and ill-formed. I’m working on a much more specific version of all this that I think I more or less understand now in 1+1 dimensions, and I hope to have something written about it this summer.

Hi Matthew,

Thanks for pointing out Bondi’s name – to be honest, I wasn’t sure if he was still alive or not (I always think of him being stuck in the era when Fred Hoyle was around), so I thought this was another Bondi, not the Bondi.

I’d forgotten about CMBR when I made my comment. Isn’t this evidence all a bit vague still?

After I read up on MOND (Modified Newtonian Dynamics), I became much more skeptical about dark matter. MOND certainly seems a viable alternative, and seems to be at a nascent stage where an empirical rule makes predictions which appear to agree in most cases with observed galactic rotation curves, although the physical origin of this law is presently unknown (but hey, we’ve been here before, with things like Balmer’s formula, etc., which do work and ultimately are consequences of a physically meaningful theory).
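For concreteness, the empirical MOND rule can be written as μ(g/a0) g = g_N, where g_N is the Newtonian acceleration and a0 ≈ 1.2 × 10⁻¹⁰ m/s². The Python sketch below assumes the commonly used “simple” interpolating function μ(x) = x/(1 + x) and treats the galaxy as a point mass, both simplifying assumptions. It shows the rotation speed becoming independent of radius far out, approaching v⁴ = G M a0, while reducing to the Newtonian value close in.

```python
import math

G = 6.674e-11     # Newton's constant, m^3 kg^-1 s^-2
A0 = 1.2e-10      # MOND acceleration scale a0, m s^-2 (commonly quoted value)
M_SUN = 1.989e30  # solar mass, kg


def mond_acceleration(g_newton, a0=A0):
    """Solve mu(g / a0) * g = g_N for g, assuming mu(x) = x / (1 + x).
    This gives the quadratic g^2 - g_N g - g_N a0 = 0; take the positive root."""
    return 0.5 * (g_newton + math.sqrt(g_newton**2 + 4.0 * g_newton * a0))


def circular_velocity(r, mass, mond=True):
    """Circular speed v = sqrt(g r) around a point mass at radius r (SI units)."""
    g_n = G * mass / r**2
    g = mond_acceleration(g_n) if mond else g_n
    return math.sqrt(g * r)


def flat_velocity(mass, a0=A0):
    """Deep-MOND asymptote (g_N << a0): v^4 = G M a0, independent of r."""
    return (G * mass * a0) ** 0.25


if __name__ == "__main__":
    kpc = 3.086e19  # metres
    galaxy = 1e11 * M_SUN
    for r_kpc in (1, 10, 100, 1000):
        r = r_kpc * kpc
        v_mond = circular_velocity(r, galaxy) / 1e3
        v_newton = circular_velocity(r, galaxy, mond=False) / 1e3
        print(f"r = {r_kpc:5d} kpc: MOND {v_mond:6.1f} km/s, Newton {v_newton:6.1f} km/s")
    print(f"asymptotic MOND speed: {flat_velocity(galaxy) / 1e3:.1f} km/s")
```

For a 10¹¹ solar-mass point mass the asymptotic speed comes out at roughly 200 km/s; an actual fit to a rotation curve would use the observed distribution of stars and gas rather than a point mass.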

Aside from this, if supersymmetry is soon to be abandoned as you suggest, Peter, then what other ideas are there that might unify the coupling constants at high energy? I’m not aware of any, and without SUSY or other symmetry principles, how else does one extend the Standard Model?

Peter –

My own investigations do not contradict what you just said. But good old Feynman-Dyson perturbation theory is IMHO an obstacle to further progress. As well as the reasons given by me at (boring) length elsewhere, let me add –

1. It is only a theory of scattering. The much-vaunted calculation of the anomalous magnetic moment of leptons takes the Compton scattering of a single photon as representing the interaction of the lepton with an external magnetic field without satisfactory justification.

2. No theory of bound states exists, so how can one claim the prediction of the Lamb shift in a single-electron atom as a success?

3. If, as seems to me to be likely, we need to look beyond local field equations to cure the infinities, then I would much rather not do it with this framework. It is too messy, complicated and self-contradictory.

I do not think that quantum field theory is wrong per se, but higher standards of mathematical consistency need to be demanded.

Are there any promising candidates at the present time that could possibly replace the quantum field theory framework?

Ideas like SUSY, string theory, supergravity, Schwinger’s source theory, analytic S-Matrix theory, stochastic quantization, geometric quantization, the Epstein-Glaser construction of the S-Matrix, Wightman’s axiomatic quantum field theory, Haag’s algebraic/local quantum field theory, loop quantum gravity, Isham’s category theory approach to quantum theory, Penrose’s twistor theory program, etc. all appear to be “stalled by potholes” of various sorts, and are either going out of style and/or have only a handful of supporters whom nobody outside their own circles really pays attention to.

It seems like anything that isn’t constrained by experimental data becomes governed by trendiness and ideology. Perhaps Freeman Dyson’s quote is really telling:

“Physics is littered with the corpses of dead unified field theories.”

I don’t think we’ve reached a final understanding of what QFT exactly is, especially in 4d. But there is so much physics that the present QFT formalism correctly predicts and there is so much beautiful mathematics related to it, that I think there is something very right about the formalism. Whatever replaces it in the long term is likely to be so close to it that it will still be called QFT. So I think it is kind of a different situation than things like supersymmetry and string theory, where the whole concept will likely have to be thrown out.

I notice that QFT is on your list of “well-tested” theories.

What is well-tested is not quantum field theory. It is a calculational recipe centred around Feynman graphs, to which mathematically meaningless infinite subtractions are applied when pathological divergences show up.

“Quantum field theory” is too grandiose a name for this arbitrary and unattractive setup, and when I hear mathematicians condoning it, I wonder whether we’ll ever progress beyond it.

I’m no expert on cosmology, but from everything I’ve seen the evidence for the standard picture of cosmology is a lot stronger than the authors of this statement are willing to concede. It seemed to me that the WMAP data was in close agreement with what was expected, and there was no reason for this to be true if the standard picture is completely wrong.

One problem is that it is unclear what is meant by the “Big-Bang model”. If you include all the conjectural stuff about inflation and dark matter, it probably is true that it hasn’t been convincingly tested, but the idea of an expanding universe does seem to have passed quite a few tests.

From my experience with string/supersymmetry, I’m inclined to be sympathetic to claims that there are problems with certain orthodoxies in physics. But I’ve also seen that there are quite a few people who reject not just the more speculative parts of physics, but large parts of well-tested theories also (e.g. rejecting not just string theory, but the standard model, QFT, even quantum mechanics). This statement sounds to me suspiciously like something that may be along those lines.

Well, I don’t know about that cosmology statement. There are some names of respected people I recognize on there (Bondi, for example). There are also a few crackpots, though (Arp, Marmet and Lerner), who have been thoroughly debunked in the past.

The statement seems rather strong as well. Certainly the Big Bang theory has had lots of quantitative successes. The microwave background was a prediction, as was the abundance of light elements. I don’t think those have been seriously challenged (though I’m by no means up to date on the field).

As for dark matter, there are a few different observations which point to it. If I recall correctly, they’re even roughly consistent. That is, if you determine the dark matter density from galactic rotation curves and from cosmological observations, the two come out roughly the same.

I realize that this isn’t (perhaps) directly related to the topic under discussion here, but I’ve just come across this surprising open letter on big bang theory, at

http://www.cosmologystatement.org

and the first sentence of it made me think about string theory and supersymmetry, as theoretical proposals which may never yield observable or measurable predictions. I quote:

“The big bang today relies on a growing number of hypothetical entities, things that we have never observed– inflation, dark matter and dark energy are the most prominent examples,”

and

“What is more, the big bang theory can boast of no quantitative predictions that have subsequently been validated by observation.”

Are the signatories of this letter doing the right thing? (I myself only recognized the names Woodward and Narlikar amongst them.) Might there be similar statements in the future for supersymmetry and string theory?

Perhaps one supposedly finite theory would be N=4 super Yang-Mills, where the beta-function is zero, so there is no coupling constant renormalization. The superconformal invariance of the theory may eliminate the usual sources of infinities. I believe there are also similar examples with N=2 supersymmetry.
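For reference, the standard one-loop computation shows the cancellation explicitly. Assuming gauge group SU(N_c) and the usual one-loop coefficient for Weyl fermions and real scalars, N=4 super Yang-Mills contains the gauge field plus four Weyl fermions and six real scalars, all in the adjoint representation, for which T(adj) = C_2(G) = N_c:

```latex
\beta(g) = -\frac{g^{3}}{16\pi^{2}}\, b_0 , \qquad
b_0 = \frac{11}{3}\, C_2(G)
      - \frac{2}{3} \sum_{\text{Weyl } f} T(R_f)
      - \frac{1}{6} \sum_{\text{real } s} T(R_s)

% N=4 SYM with gauge group SU(N_c): 4 adjoint Weyl fermions, 6 adjoint real scalars
b_0 = \frac{11}{3} N_c - \frac{2}{3}\,(4 N_c) - \frac{1}{6}\,(6 N_c)
    = \left( \frac{11}{3} - \frac{8}{3} - 1 \right) N_c = 0
```

The vanishing of the beta function in N=4 is believed to hold to all orders, not just at one loop; the one-loop count above is only the simplest check.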

The other “finiteness” has to do with finiteness of Feynman diagrams at 1, 2, etc. loops. For a while people believed that N=8 supergravity might have all terms in its Feynman diagram expansion finite, but now the belief seems to be that at high enough order one gets infinities.

Your post prompted me to dig out my supersymmetry notes from about 1982. One of the promises made by Peter West at the time was that supersymmetric theories were “finite”. That would in itself be a reason for studying them, but the two pages I have on “Perturbation theory in Superspace” look depressingly like the familiar infinity-plagued Feynman-Dyson perturbation theory. I am wondering therefore whether this finiteness is mere wishful thinking. Do you, or anyone else who might be reading this, know more about this?